Ansys Advantage Magazine

Discover Excellence in Engineering Simulation

Each issue of Ansys Advantage showcases customer stories and important trends across a variety of industries. Learn how engineering simulation is accelerating product development, adding value, and reducing costs.

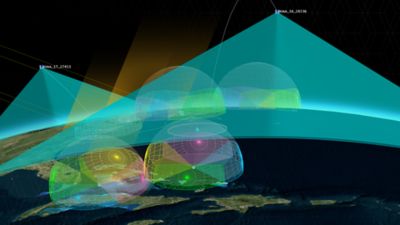

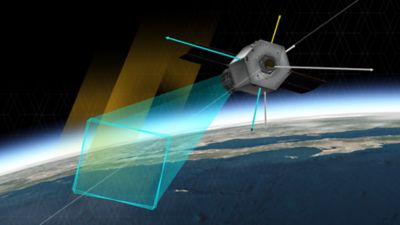

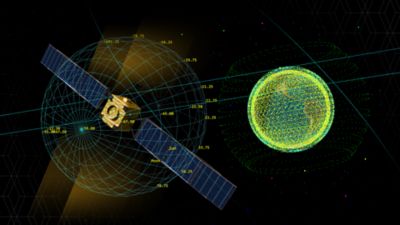

Simulating Space

"The ability to model entire system-of-systems architectures, evaluate thousands of design alternatives in real time, and simulate mission scenarios before launch is a game-changer — one that will determine which organizations lead in this new space economy."

— James Woodburn, Ansys Fellow, Ansys Government Initiatives

In This Issue:

Plan the Mission

Design the Assets

Protect the Assets

Take the Moonshot