Ansys Blog

September 29, 2022

How Simulation Creates Mixed Reality

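As the standard-bearer for the many advantages of combining the physical and virtual worlds, simulation is, unsurprisingly, at the heart of the latest advances in extended reality (XR). XR is the umbrella term for technologies such as virtual, augmented, and mixed reality, along with all the wearables, sensors, artificial intelligence (AI), and software these fast-growing technologies encompass. It is a pretty big umbrella, with applications in entertainment, healthcare, employee training, industrial manufacturing, remote work, and more already in use, and many more on the horizon.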

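Because XR is still being defined, it is hard to get a handle on its size. Some analysts valued the market at $31 billion in 2021 and project that it will reach $300 billion by 2024.¹ If those predictions are even close to reality, companies large and small are surely at work right now on a multitude of new XR products, applications, and experiences. But what will it take for XR to reach this potential?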

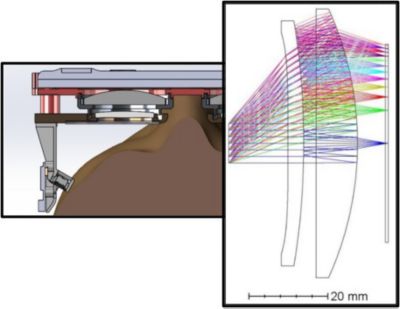

Optics bring extended reality (XR) into focus, but unlike traditional camera lenses, XR uses more compact waveguide and "pancake lens" designs.

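It may help to break the term XR down into its individual parts; a good overview is provided here. As that article explains, augmented reality (AR) lets you add virtual images on top of your view of the real world, while virtual reality (VR) removes you from the real world entirely and immerses you, to varying degrees, in a virtual one. As its name implies, mixed reality (MR) is a blend of AR and VR.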

What Is Mixed Reality?

Mixed reality merges our physical world with digital assets, enabling virtual objects to interact with the real world in real time.

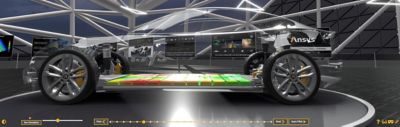

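Imagine a structural engineer and an electrical engineer standing in the same room, able to see each other and also a virtual model of an electric motor floating in the real world. They can rotate the model, zoom in on it, or look inside it to examine its components, just as they would on a computer screen. They can also walk around it or place it in the engine compartment of a physical car to see how it fits the actual space and to better understand how it interacts with other system components, all while collaborating without losing important verbal and nonverbal communication cues. Of course, not everyone needs to be in the same room. If an interactive 3D model of a motor can be projected into a real room, why not a 3D hologram of another engineer who is 1,000 miles away?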

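It is easy to imagine similar scenarios in classrooms, operating rooms, factory floors, and elsewhere. Getting the most out of MR requires microphones, cameras, and sensors (such as accelerometers, infrared detectors, and eye trackers), along with a fast connection to data in the cloud. To take full advantage of these technologies, users must wear a headset to experience the full effect.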

The operating room is just one use case for mixed reality. It can serve as a more effective training tool or help guide surgeons.

Getting the Optics Right

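To create the desired MR experience, the optical design components and their challenges must be addressed first. Headsets need to be small and light so that they are comfortable, even fashionable, to wear for hours every day. Digital images must be bright enough to be viewed in all lighting conditions, including sunny days. Achieving high resolution and depth of field (DOF, the distance between the nearest and farthest points that appear in focus in an image) is also a challenge.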

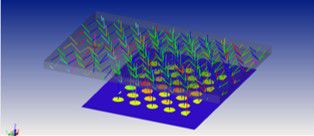

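To create the most realistic and accurate MR environments, companies are using optical simulation tools such as Ansys Zemax OpticStudio and Ansys Lumerical products to design their optical systems. Together, OpticStudio and Lumerical provide a complete, integrated solution at both the macroscopic and subwavelength scales, helping you meet and optimize the optical design goals of MR applications. OpticStudio can simulate the entire system at the macro scale, while Lumerical can simulate the diffractive components that are increasingly used to improve performance and shrink these systems, and that require subwavelength physics.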

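Using OpticStudio and Lumerical together, you can design every optical component in a device, from the light source to the human eye. Optimization and tolerancing capabilities help ensure that your design succeeds and that your product is manufacturable. All of this is done with models and tools for ray tracing, finite-difference time-domain (FDTD), and rigorous coupled-wave analysis (RCWA), backed by powerful solvers.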

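Using Lumerical and OpticStudio in tandem can be illustrated with two designs common in XR applications: waveguide designs and "pancake lens" designs. In optics, waveguides live up to their name because they guide light waves. Pancake designs (also known as folded optics) bring the lens and display closer together, enabling a more compact design while minimizing unwanted movement.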

Examples of a pancake lens design (left) and a waveguide design (above)

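To optimize a waveguide design, you can use Lumerical to simulate the behavior of light through the parts of the optical system that use diffraction gratings, components that can be designed to spread or disperse light in different directions with high efficiency. At the macro scale, you can use OpticStudio to simulate the behavior of light throughout the entire optical system.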
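How a diffraction grating redirects light into a waveguide can be illustrated with the scalar grating equation. The sketch below is a simplified illustration only and is not how Lumerical or OpticStudio model gratings. It assumes a one-dimensional grating on a flat glass slab, light arriving from air, and the sign convention n·sin(θ_m) = sin(θ_i) + m·λ/Λ. The function names and the example values (532 nm light, a 400 nm period, a glass index of 1.8) are hypothetical choices for illustration.

```python
import math


def diffracted_angle_deg(wavelength_nm, period_nm, n_glass,
                         incidence_deg=0.0, order=1):
    """Angle (degrees, measured inside the glass) of a transmitted order.

    Uses n_glass * sin(theta_m) = sin(theta_i) + order * wavelength / period,
    with light incident from air. Returns None if the order is evanescent.
    """
    s = (math.sin(math.radians(incidence_deg))
         + order * wavelength_nm / period_nm) / n_glass
    if abs(s) > 1.0:
        return None  # no propagating order in the glass
    return math.degrees(math.asin(s))


def is_guided(angle_deg, n_glass):
    """True if the ray exceeds the glass/air critical angle (total internal reflection)."""
    if angle_deg is None:
        return False
    critical_deg = math.degrees(math.asin(1.0 / n_glass))
    return abs(angle_deg) > critical_deg


# Hypothetical example values, not taken from any real product design.
angle = diffracted_angle_deg(wavelength_nm=532.0, period_nm=400.0, n_glass=1.8)
print("first-order angle in glass (deg):", angle)
print("trapped by total internal reflection:", is_guided(angle, 1.8))
```

In this simplified picture, a shorter grating period bends the first order more steeply, which is what lets the light stay trapped in the slab. Diffraction efficiency, the quantity the article says these components are designed for, is not captured by the grating equation and needs a rigorous solver such as RCWA or FDTD.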

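To enhance a VR pancake design, you can use Lumerical to simulate the lens's optical filters, including its curved polarizer and quarter-wave plate, and then simulate the entire system in OpticStudio to detect and predict ghost images.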
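Why the polarizer and quarter-wave plate matter for ghost images can be sketched with Jones calculus. The example below is a rough illustration under strong assumptions, not the method used by Lumerical or OpticStudio: it treats the components as ideal and flat, works at normal incidence in a fixed lab frame, and models two passes through a retarder at 45° as applying the same Jones matrix twice. The function names and the 10% retardance error are hypothetical.

```python
import cmath
import math


def retarder(theta_rad, retardance_rad):
    """Jones matrix of a linear retarder with its fast axis at theta.

    Equivalent to R(-theta) * diag(1, exp(i*retardance)) * R(theta),
    with the global phase dropped.
    """
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    e = cmath.exp(1j * retardance_rad)
    off_diagonal = (1 - e) * s * c
    return [[c * c + e * s * s, off_diagonal],
            [off_diagonal, s * s + e * c * c]]


def apply(matrix, vec):
    """Multiply a 2x2 Jones matrix by a 2-element Jones vector."""
    return [matrix[0][0] * vec[0] + matrix[0][1] * vec[1],
            matrix[1][0] * vec[0] + matrix[1][1] * vec[1]]


def double_pass_leakage(retardance_rad):
    """Fraction of x-polarized light still x-polarized after two passes.

    With an ideal quarter-wave plate (retardance pi/2) at 45 degrees, the
    polarization is rotated by 90 degrees and the leakage is zero. Any
    leftover light in the original polarization takes an unintended path,
    which is one simplified way to picture a ghost image.
    """
    plate = retarder(math.pi / 4, retardance_rad)
    out = apply(plate, apply(plate, [1.0, 0.0]))
    return abs(out[0]) ** 2


ideal = double_pass_leakage(math.pi / 2)
# Hypothetical plate whose retardance is 10% below a quarter wave.
imperfect = double_pass_leakage(0.9 * math.pi / 2)
print("leakage, ideal plate:", ideal)
print("leakage, 10% retardance error:", imperfect)
```

Under these assumptions the leakage equals cos² of the single-pass retardance, so even small retardance errors put a measurable fraction of light on the wrong path. Real plates also vary with wavelength and angle of incidence, which this sketch ignores.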

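In addition, Lumerical and OpticStudio can be dynamically linked for seamless, deep integration. This connected workflow enables fast design iterations, which are important for optimizing and tolerancing the system as a whole. Tolerance analysis examines the impact of expected manufacturing and assembly errors to ensure that all model specifications are properly balanced to achieve the optical design you need.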
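The idea behind tolerance analysis can be sketched as a small Monte Carlo experiment. This is a generic illustration, not OpticStudio's tolerancing algorithm: it perturbs a single thin lens described by the lensmaker's equation and counts how often the focal length stays within a specification. The nominal lens, the error magnitudes, and the ±0.1 mm specification are all hypothetical values.

```python
import random


def focal_length_mm(n, r1_mm, r2_mm):
    """Thin-lens focal length from the lensmaker's equation (lens in air)."""
    return 1.0 / ((n - 1.0) * (1.0 / r1_mm - 1.0 / r2_mm))


def monte_carlo_yield(trials=10000, seed=0):
    """Fraction of randomly perturbed lenses that meet the focal-length spec."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    nominal = focal_length_mm(1.5, 50.0, -50.0)  # symmetric biconvex lens
    spec_mm = 0.1  # hypothetical allowed focal-length deviation
    passed = 0
    for _ in range(trials):
        # Hypothetical Gaussian manufacturing errors (standard deviations).
        n = rng.gauss(1.5, 0.0005)
        r1 = rng.gauss(50.0, 0.05)
        r2 = rng.gauss(-50.0, 0.05)
        if abs(focal_length_mm(n, r1, r2) - nominal) <= spec_mm:
            passed += 1
    return passed / trials


print("nominal focal length (mm):", focal_length_mm(1.5, 50.0, -50.0))
print("estimated yield:", monte_carlo_yield())
```

Tightening one tolerance and loosening another while watching the yield is a simple way to see what "properly balanced" specifications means in practice. A real headset has many more parameters, and its merit function would be image quality rather than a single focal length.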

Simulating the Changes Ahead

To learn how Ansys can help you achieve your MR goals by enhancing your optical design, sign up for a free trial of OpticStudio or learn more about Lumerical FDTD.

An artist's rendering of an electric vehicle energy storage system in a mixed reality environment.