ANSYS BLOG

April 16, 2020

Perception Algorithms Are the Key to Autonomous Vehicles Safety

It has taken millions of years for humans to evolve the biological perception algorithm that lets us drive cars, operate machinery, pilot aircraft and navigate ships.

Engineers aim to develop sensors and artificial intelligence (AI) that will eventually outperform eyes, ears and brains shaped by fierce predators, natural disasters, hunting and foraging.

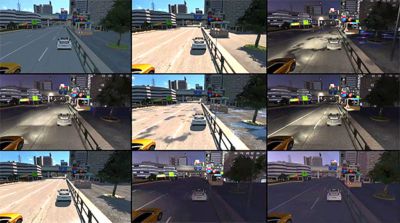

Representation of an autonomous car’s perception algorithm

The good news is that engineers can mimic the way nature forged us. They can run perception algorithms through millions of tests, training them to safely detect and react to road conditions and objects that have been labeled and identified.

So far, this process has brought autonomous vehicles (AVs) and advanced driver assistance systems (ADAS) to Level 2 or Level 3 autonomy. At these levels, vehicles can take over some functions, such as emergency braking and lane assist. Ultimately, however, a human is still behind the wheel.

Levels of autonomy based on the Society of Automotive Engineers’ (SAE International) guidelines.

Before we can be confident that fully autonomous vehicles reduce fatalities and injuries, it is estimated that a fleet of hundreds of vehicles would need to drive almost 9 billion miles.

So, how can engineers verify the safety of these systems without waiting hundreds of years for these tests to finish? The answer is digital testing and simulation.
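The "hundreds of years" figure follows from simple arithmetic. The sketch below is a back-of-the-envelope estimate, not a reproduction of the original study: it assumes a fleet of 100 vehicles averaging 25 mph and driving around the clock, every day of the year. Only the roughly 9 billion miles comes from the text; the fleet size and speed are illustrative assumptions.

```python
def years_to_drive(total_miles: float, fleet_size: int, avg_speed_mph: float,
                   hours_per_day: float = 24.0) -> float:
    """Years a fleet needs to accumulate total_miles, assuming nonstop daily operation."""
    miles_per_year = fleet_size * avg_speed_mph * hours_per_day * 365
    return total_miles / miles_per_year


# Assumed inputs: ~9 billion miles (from the text), 100 vehicles, 25 mph average, 24/7.
years = years_to_drive(9e9, fleet_size=100, avg_speed_mph=25)
print(f"{years:.0f} years")  # 411 years
```

Even with every vehicle running nonstop, the fleet needs centuries; a larger fleet shortens this only linearly.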

It’s Infeasible to Test Autonomous Vehicle Perception Algorithms Manually

The ADAS features associated with Level 2 autonomous driving are already an impressive safety advance. They could potentially prevent a third of all passenger-vehicle crashes, reducing associated injuries by 37% and deaths by 29%. Imagine, then, the potential of fully autonomous vehicles.

To make that leap, engineers still need to process millions of miles of road tests. Because labeling the objects and road conditions in a single hour of driving footage could take 800 human hours, performing this work manually is not economical.
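To see why manual labeling does not scale, the sketch below converts test mileage into labeling effort. The 800-hours-per-footage-hour ratio comes from the text; the 25 mph average speed, the 2,000 working hours per person-year and the one-million-mile example are illustrative assumptions.

```python
LABEL_HOURS_PER_FOOTAGE_HOUR = 800  # figure quoted in the text
WORK_HOURS_PER_PERSON_YEAR = 2000   # assumption: ~40 h/week for 50 weeks


def footage_hours(miles: float, avg_speed_mph: float) -> float:
    """Hours of video recorded while driving the given distance."""
    return miles / avg_speed_mph


def labeling_person_years(miles: float, avg_speed_mph: float) -> float:
    """Person-years of manual labeling needed for that footage."""
    label_hours = footage_hours(miles, avg_speed_mph) * LABEL_HOURS_PER_FOOTAGE_HOUR
    return label_hours / WORK_HOURS_PER_PERSON_YEAR


# Assumed example: one million test miles at a 25 mph average speed.
print(labeling_person_years(1e6, 25))  # 16000.0
```

Under these assumptions, a single million miles of footage already represents thousands of person-years of labeling, far short of the billions of miles discussed above.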

The efforts needed to manually develop an autonomous vehicle perception algorithm

To address this challenge, engineers can use Ansys SCADE Vision powered by Hologram, which does not require labeled data to identify issues with the perception algorithm. Instead, it runs raw driving footage through the algorithm's neural network, then slightly modifies the video (typically by blurring the images) and runs it through the network a second time. Comparing the results of the two runs reveals edge cases: places where the network made weak detections or produced false negatives.
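The consistency check described above can be illustrated with a toy example. This is a minimal sketch of the general idea only, not how SCADE Vision or Hologram is implemented: frames are hypothetical one-dimensional grayscale rows, `toy_detector` is a hypothetical stand-in for a neural network that scores contrast, and the blur radius and both thresholds are arbitrary assumptions. A frame is flagged when blurring makes a detection disappear (a possible false negative) or makes its confidence drop sharply (a weak detection).

```python
from typing import Callable, List, Sequence, Tuple

Frame = List[float]  # hypothetical: one row of grayscale pixel values in [0, 1]


def box_blur(frame: Frame, radius: int = 1) -> Frame:
    """Slightly perturb a frame by averaging each pixel with its neighbours."""
    out = []
    for i in range(len(frame)):
        window = frame[max(0, i - radius):min(len(frame), i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def toy_detector(frame: Frame) -> float:
    """Hypothetical stand-in for a neural network: confidence = frame contrast."""
    return max(frame) - min(frame)


def find_edge_cases(
    frames: Sequence[Frame],
    detect: Callable[[Frame], float],
    perturb: Callable[[Frame], Frame],
    detect_threshold: float = 0.5,  # assumed: confidence needed to count as a detection
    max_drop: float = 0.3,          # assumed: largest acceptable confidence drop
) -> List[Tuple[int, str]]:
    """Run each frame twice (original and perturbed) and flag inconsistent results."""
    flagged = []
    for idx, frame in enumerate(frames):
        original = detect(frame)
        perturbed = detect(perturb(frame))
        if original >= detect_threshold and perturbed < detect_threshold:
            flagged.append((idx, "possible false negative"))
        elif original - perturbed > max_drop:
            flagged.append((idx, "weak detection"))
    return flagged


frames = [
    [0, 0, 0, 1, 1, 1, 1],  # wide object: survives blurring
    [0, 0, 1, 0, 0],        # one-pixel object: vanishes after blurring
    [0, 0, 1, 1, 0, 0],     # two-pixel object: confidence drops
]
print(find_edge_cases(frames, toy_detector, box_blur))
# [(1, 'possible false negative'), (2, 'weak detection')]
```

No labels are needed at any point: the only reference for each frame is the network's own output on the unmodified input.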

Using this system, engineers can drastically reduce the amount of time spent labeling driving footage. To learn more, read the article: Autonomous Safety in Sight.

Simulations Simplify Perception Algorithm Testing

What happens when engineers are unable to capture scenarios in driving footage? Some scenarios are dangerous, time-consuming and expensive to set up. Others are edge cases so obscure that a test drive is unlikely ever to encounter them.

In these cases, Ansys VRXPERIENCE Driving Simulator powered by SCANeR could be a great alternative. Instead of relying on real-world data, engineers can use this technology to create a virtual driving experience.

Ansys VRXPERIENCE Driving Simulator powered by SCANeR automatically creates known scenarios to test perception algorithms.

Once the virtual environment is created, the software can automatically alter the simulation to see how the perception algorithm would react to various driving, weather and safety scenarios. So, instead of waiting for an edge case to occur on camera, engineers can set up the virtual environment to cycle through millions of these scenarios.
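Cycling through scenarios amounts to sweeping a space of parameters. The sketch below is a hypothetical illustration, not the VRXPERIENCE or SCANeR API: the parameter names and values are invented, and `fake_run` stands in for a real simulator run that reports whether the perception algorithm passed. It shows how every combination of a few parameters becomes a distinct test, and how the count multiplies with each parameter added.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List

# Hypothetical scenario parameters; a real simulator exposes its own, much richer set.
SCENARIO_SPACE: Dict[str, List] = {
    "weather": ["clear", "rain", "fog", "snow"],
    "time_of_day": ["day", "dusk", "night"],
    "pedestrian_speed_mps": [0.0, 1.0, 2.0],
    "occluded": [False, True],
}


def scenarios(space: Dict[str, List]) -> Iterator[Dict]:
    """Yield every combination of parameter values as one scenario."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def sweep(space: Dict[str, List], run_scenario: Callable[[Dict], bool]) -> List[Dict]:
    """Run each scenario and return the ones where the perception check failed."""
    return [s for s in scenarios(space) if not run_scenario(s)]


def fake_run(s: Dict) -> bool:
    """Stand-in for a simulator run: pretend detection fails at night, in fog, when occluded."""
    return not (s["weather"] == "fog" and s["time_of_day"] == "night" and s["occluded"])


total = sum(1 for _ in scenarios(SCENARIO_SPACE))
failures = sweep(SCENARIO_SPACE, fake_run)
print(total, len(failures))  # 72 3
```

Four small parameters already yield 72 scenarios; with dozens of parameters and finer value grids, the same product quickly reaches the millions of scenarios mentioned above.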

This tool could drastically reduce the time and cost of evaluating AV and ADAS systems, because it eliminates the need to manually label footage and reduces the amount of physical testing needed to validate the technology. To learn more, read the article: Engineer Perception, Prediction and Planning into ADAS.

Any and all ANSYS, Inc. brand, product, service and feature names, logos and slogans such as Ansys, Ansys SCADE and Ansys VRXPERIENCE are registered trademarks or trademarks of ANSYS, Inc. or its subsidiaries in the United States or other countries.