
ANSYS BLOG

March 28, 2019

Designing and Validating Sensors for ADAS and Autonomous Vehicles in a Virtual World

Engineers are dreaming up a world without traffic accidents. According to General Motors’ Self-Driving Safety Report, autonomous vehicles could eliminate human driving error, the main cause of 94 percent of traffic accidents. In other words, a shift toward autonomous vehicles and advanced driver-assistance systems (ADAS) could save millions of lives.

ANSYS VRXPERIENCE helps engineers validate ADAS and autonomous vehicles.

Making vehicles fully autonomous is complicated. Car manufacturers must first prove that their autonomous vehicles, ADAS and sensors are safe. How can they do that? One method is to conduct billions of miles of physical road tests in complex driving environments and challenging weather. However, this approach is expensive and risky, and it makes it hard to ensure that every edge case is adequately tested.

Seeking a solution that is faster, safer and more economical than physical testing, engineers are turning to Ansys Speos. This optical simulation solution speeds up the positioning of sensors in the autonomous vehicle by eliminating the need to build expensive, labor-intensive prototypes. Speos sensor models can then be test-driven within Ansys VRXPERIENCE, a virtual reality (VR) tool that lets users test, validate and experience the sensors’ performance in realistic driving conditions.

Designing ADAS and Autonomous Vehicle Sensors with Speos

How a sensor is integrated into the vehicle directly affects what the autonomous vehicle perceives. Speos provides a tool set that lets engineers assess and optimize sensor positioning and vehicle perception.

To simulate a sensor within Speos, engineers import their computer-aided design (CAD) geometry and the road environment. They then set up material definitions and optical properties for the car, environment and sensor.
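To illustrate what a material's optical properties mean for the simulated image, here is a minimal Python sketch. It assumes a purely diffuse (Lambertian) surface, for which the reflected radiance is L = ρE/π. The `Material` class, the `lambertian_radiance` helper and the reflectance values are hypothetical illustrations. They are not Speos's material model or API, and a real optical simulation typically uses richer surface descriptions than a single reflectance number.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    """Hypothetical material definition: a purely diffuse (Lambertian) surface."""
    name: str
    diffuse_reflectance: float  # fraction of incident light reflected, 0..1

    def __post_init__(self) -> None:
        if not 0.0 <= self.diffuse_reflectance <= 1.0:
            raise ValueError("diffuse_reflectance must lie in [0, 1]")


def lambertian_radiance(material: Material, irradiance_w_m2: float) -> float:
    """Radiance (W/m^2/sr) leaving a Lambertian surface: L = rho * E / pi."""
    return material.diffuse_reflectance * irradiance_w_m2 / math.pi


if __name__ == "__main__":
    # Illustrative reflectances and irradiance only, not measured data.
    for m in (Material("white truck paint", 0.8), Material("asphalt", 0.1)):
        print(f"{m.name}: {lambertian_radiance(m, 1000.0):.1f} W/m^2/sr")
```

A bright, diffuse surface under strong sunlight can return radiance close to that of the sky behind it. That is one reason the white-truck scenario pictured below is a demanding test case.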

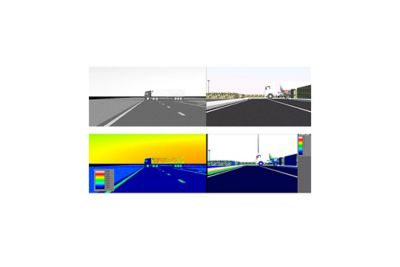

Speos is used to simulate what a sensor would capture when it encounters a white truck and a bright sky.

The camera sensor’s characteristics are used to generate accurate images from the simulation. For example, the user specifies the camera’s location, orientation, number of pixels, sensitivity, white balance and other parameters.
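Location, orientation and pixel count together determine where a point in the scene lands on the image. The Python sketch below shows this geometry under several assumptions:

- The camera is an ideal pinhole with no lens distortion.
- It rotates only about the vertical axis.
- Sensitivity and white balance are not modeled.

The `PinholeCamera` class, its field names and the vehicle-frame convention (x forward, y left, z up) are hypothetical. They do not reflect how Speos models a camera.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PinholeCamera:
    """Hypothetical ideal pinhole camera; not Speos's sensor model."""
    position: Vec3      # metres, vehicle frame: x forward, y left, z up
    yaw_deg: float      # rotation about z; 0 means looking along +x
    width_px: int
    height_px: int
    hfov_deg: float     # horizontal field of view

    def focal_px(self) -> float:
        # Focal length expressed in pixels, derived from the horizontal FOV.
        return (self.width_px / 2) / math.tan(math.radians(self.hfov_deg) / 2)

    def project(self, point: Vec3) -> Optional[Tuple[float, float]]:
        """Pixel coordinates (u right, v down) of a world point, or None if unseen."""
        dx, dy, dz = (p - c for p, c in zip(point, self.position))
        yaw = math.radians(self.yaw_deg)
        # Express the offset in the camera frame (forward, left, up).
        fwd = math.cos(yaw) * dx + math.sin(yaw) * dy
        left = -math.sin(yaw) * dx + math.cos(yaw) * dy
        if fwd <= 0:
            return None  # point is behind the camera
        f = self.focal_px()
        u = self.width_px / 2 - f * left / fwd
        v = self.height_px / 2 - f * dz / fwd
        if 0 <= u < self.width_px and 0 <= v < self.height_px:
            return (u, v)
        return None  # projects outside the sensor area


if __name__ == "__main__":
    cam = PinholeCamera((1.5, 0.0, 1.3), 0.0, 1920, 1080, 60.0)
    # A point 28.5 m ahead of the camera, slightly to the right and below it.
    print(cam.project((30.0, -2.0, 1.0)))
```

Changing `position` or `yaw_deg` in this sketch moves where objects fall on the pixel grid, or pushes them out of view entirely.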

To calculate the camera’s performance, engineers create a pixel grid that helps identify any mechanical interference with the camera’s view. The simulation also accounts for various light sources, including the sun, street lights and headlamps.
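One way to picture such a pixel grid is to cast one ray through the center of each pixel and check whether it hits part of the vehicle before reaching the scene. The Python sketch below does this with a standard ray/axis-aligned-box slab test. Its simplifications are all hypothetical:

- Vehicle parts are represented as boxes.
- The camera looks along +x, with y to the left and z up.
- The dimensions are illustrative.

This is not how Speos itself computes interference.

```python
import math


def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray origin + t * direction (t >= 0) hit the box?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                return False  # ray is parallel to this slab and outside it
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return False
    return True


def obstructed_fraction(origin, width_px, height_px, hfov_deg, obstacles):
    """Fraction of pixels whose center ray hits any (box_min, box_max) obstacle.

    Hypothetical convention: camera looks along +x, with y to the left and z up.
    """
    f = (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    blocked = 0
    for row in range(height_px):
        for col in range(width_px):
            # Direction of the ray through this pixel's center.
            left = (width_px / 2 - (col + 0.5)) / f
            up = (height_px / 2 - (row + 0.5)) / f
            direction = (1.0, left, up)
            if any(ray_hits_box(origin, direction, lo, hi) for lo, hi in obstacles):
                blocked += 1
    return blocked / (width_px * height_px)


if __name__ == "__main__":
    # A box standing in for the hood, just below and ahead of a camera at 1.2 m.
    hood = ((0.5, -2.0, 0.0), (2.0, 2.0, 1.1))
    frac = obstructed_fraction((0.0, 0.0, 1.2), 64, 48, 90.0, [hood])
    print(f"{frac:.1%} of pixels see the hood")
```

A coarse grid keeps the sketch fast. A finer grid, or several rays per pixel, would give a more precise estimate at a higher cost.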

Once Speos has this data, engineers can generate the virtual images that the virtual camera would capture. They can then use various post-processing tools to evaluate the camera’s performance against standardized automotive tests.
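The post does not name the specific standardized tests. As a stand-in, the Python sketch below computes a simple contrast measure between a target region (say, the white truck) and its background (the bright sky) in a simulated image. The following are assumptions chosen for illustration:

- Michelson contrast as the metric.
- The region format.
- The `MIN_CONTRAST` threshold.

None of these are metrics defined by a particular automotive standard or output by Speos's post-processing tools.

```python
from typing import List, Sequence, Tuple

Region = Tuple[int, int, int, int]  # (row_start, row_end, col_start, col_end), end-exclusive

# Hypothetical pass/fail threshold for illustration only; not taken from any standard.
MIN_CONTRAST = 0.1


def mean_luminance(image: Sequence[Sequence[float]], region: Region) -> float:
    """Average pixel value inside the region."""
    r0, r1, c0, c1 = region
    values = [image[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    if not values:
        raise ValueError("region contains no pixels")
    return sum(values) / len(values)


def michelson_contrast(image: Sequence[Sequence[float]], target: Region, background: Region) -> float:
    """|a - b| / (a + b) for the mean luminances a and b of the two regions."""
    a = mean_luminance(image, target)
    b = mean_luminance(image, background)
    return 0.0 if a + b == 0 else abs(a - b) / (a + b)


if __name__ == "__main__":
    # Synthetic 4 x 4 image: bright sky in the top rows, a slightly darker truck below.
    image: List[List[float]] = [[240.0] * 4 for _ in range(2)] + [[220.0] * 4 for _ in range(2)]
    c = michelson_contrast(image, target=(2, 4, 0, 4), background=(0, 2, 0, 4))
    print(f"contrast = {c:.3f}, passes: {c >= MIN_CONTRAST}")
```

In this synthetic example the truck is barely distinguishable from the sky. That is exactly the kind of low-contrast situation a camera evaluation should flag.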

Validating ADAS and Autonomous Vehicle Sensors with VRXPERIENCE

VRXPERIENCE enables engineers to create a virtual world that tests the limits of sensors, ADAS and autonomous vehicles.

Engineers can customize each virtual drive, selecting from a variety of driving scenarios and road conditions. This lets them make sure that their autonomous vehicles, ADAS and sensors are all tested on edge cases.
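Selecting from many scenarios and road conditions amounts to a parameter sweep. The Python sketch below enumerates every combination of a few scenario parameters and flags those that stack several difficult conditions as candidate edge cases. The parameter names, their values and the "three or more hard conditions" rule are hypothetical. They are not VRXPERIENCE's scenario format.

```python
from dataclasses import dataclass
from itertools import product
from typing import List


@dataclass(frozen=True)
class Scenario:
    weather: str
    time_of_day: str
    road_surface: str
    target: str


# Hypothetical parameter values for illustration.
WEATHER = ("clear", "rain", "fog", "snow")
TIME_OF_DAY = ("noon", "dusk", "night")
ROAD_SURFACE = ("dry", "wet", "icy")
TARGETS = ("pedestrian", "cyclist", "white truck")

# Conditions this sketch treats as "hard" (an assumption, not a standard list).
HARD = {"fog", "snow", "night", "icy", "white truck"}


def build_test_matrix() -> List[Scenario]:
    """Full-factorial set: every combination of the parameter values above."""
    return [Scenario(*combo) for combo in product(WEATHER, TIME_OF_DAY, ROAD_SURFACE, TARGETS)]


def edge_cases(scenarios: List[Scenario], min_hard: int = 3) -> List[Scenario]:
    """Scenarios in which at least `min_hard` parameters take a hard value."""
    def hardness(s: Scenario) -> int:
        return sum(v in HARD for v in (s.weather, s.time_of_day, s.road_surface, s.target))
    return [s for s in scenarios if hardness(s) >= min_hard]


if __name__ == "__main__":
    matrix = build_test_matrix()
    print(len(matrix), "scenarios,", len(edge_cases(matrix)), "flagged as edge cases")
```

A full-factorial sweep grows multiplicatively with each added parameter. In practice, engineers would typically prioritize the flagged combinations rather than run every one.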

VRXPERIENCE lets engineers test their autonomous vehicles, ADAS and sensors faster than they could with physical prototypes.


The tool also eliminates any risk that real-world tests would pose to other drivers and pedestrians.