ANSYS BLOG

June 15, 2020

How to Improve Product Sounds Using Acoustic Simulations

Engineers tend to spend a lot of time tweaking and simulating a design to optimize its look, feel and performance. However, they should not underestimate the sounds their products make, or leave those sounds to be addressed late in the development cycle.

Engineers can design the best dishwasher in the world. But if it’s too loud, or too quiet, people may not think it’s working properly.

The perceived quality that sound gives a product can be positive as well. We all wait in anticipation for the pop of a toaster, the snap of a seat belt or the click of a connector. In fact, these sounds are so satisfying that it’s common to hear them in commercials.

On the other hand, a bad sound could be disastrous to a product. Imagine a ceiling fan that cooled a room in seconds but sounded like a helicopter: no one would buy it.

Because sound can affect product sales, it is risky for engineering teams to follow the traditional approach of waiting until physical prototyping to hear their design for the first time. At that stage of the development cycle, fixing a noise problem may be too costly.

What if you could listen to your product’s sound during the design stage? You can, by using acoustic simulations. In fact, companies have started to create whole departments that focus on optimizing product sounds.

Without the satisfying snap, people would wonder if their seatbelt was working properly. With acoustic simulations, engineers can ensure the product sounds optimal.

The Science Behind Acoustic Simulations

Before we dig into how to listen to a simulation, let’s discuss where these sounds come from.

Products that contain vibrating parts, impacting components, fluid flows and electromagnetic fields can all produce sound by vibrating the air, or medium, around them. So, to simulate the noises that products make, you must first simulate their functions.

For instance, to simulate the sound inside a car, you must model the wind that brushes past it and the vibroacoustic behavior of the powertrain. Even the sound of the air conditioning system matters in electric vehicles (EVs), which lack the engine noise that would otherwise mask it.

Once the product’s operation is modeled, all of these noises can be identified and studied for their sound level and quality. Sound levels are expressed in decibels (dB), a logarithmic unit used for sound pressure, sound power and sound intensity levels, each measured relative to a reference value.
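To make the decibel arithmetic concrete, here is a minimal Python sketch (not part of any Ansys product) that computes a sound pressure level from pressure samples using the standard 20 µPa reference for air, and combines the levels of uncorrelated sources. The function names and the example tone are illustrative assumptions.

```python
import math

P_REF = 20e-6  # reference sound pressure in air: 20 micropascals

def rms(samples):
    """Root-mean-square value of a sequence of acoustic pressure samples in Pa."""
    return math.sqrt(sum(p * p for p in samples) / len(samples))

def spl_db(samples):
    """Sound pressure level in dB re 20 uPa: L_p = 20*log10(p_rms / p_ref)."""
    return 20.0 * math.log10(rms(samples) / P_REF)

def combine_levels(levels_db):
    """Total level of uncorrelated sources: add the energies, not the decibels."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))

# Example: a 1 kHz tone with 1 Pa amplitude, sampled at 48 kHz over one full period
fs, f = 48_000, 1_000
tone = [math.sin(2 * math.pi * f * n / fs) for n in range(fs // f)]
print(round(spl_db(tone), 1))              # 91.0
print(round(combine_levels([80, 80]), 1))  # 83.0: two equal sources add about 3 dB
```

Because decibels are logarithmic, two equally loud, uncorrelated sources raise the total by about 3 dB rather than doubling the number.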

Sound quality, however, is harder to assess because it is subjective. Psychoacoustic indicators such as loudness, sharpness, roughness, tonality and fluctuation strength can estimate how a sound will be perceived. Because perception varies from person to person, though, an accurate rating of sound quality requires listening tests in which each participant in a group hears the sound, followed by statistical analysis of their responses.
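Psychoacoustic indicators do not scale like decibels. As one small illustration, the Python sketch below converts between loudness level in phons and loudness in sones using the well-known relation N = 2^((L_N − 40)/10), under which a 10-phon increase is heard as roughly a doubling of loudness. It covers only this simple conversion at and above 40 phon; computing loudness from a simulated signal requires a full standardized model (such as those in ISO 532), which this sketch does not attempt. The function names and the two design levels are hypothetical.

```python
import math

def phon_to_sone(level_phon: float) -> float:
    """Loudness N (sone) from loudness level L_N (phon), for L_N >= 40 phon."""
    if level_phon < 40.0:
        raise ValueError("the 2**((L_N - 40)/10) relation is only applied here at or above 40 phon")
    return 2.0 ** ((level_phon - 40.0) / 10.0)

def sone_to_phon(loudness_sone: float) -> float:
    """Inverse relation: L_N = 40 + 10*log2(N), for N >= 1 sone."""
    if loudness_sone < 1.0:
        raise ValueError("the inverse relation is only applied here for N >= 1 sone")
    return 40.0 + 10.0 * math.log2(loudness_sone)

# Hypothetical comparison of two design variants
baseline, quieter = phon_to_sone(70.0), phon_to_sone(60.0)
print(baseline, quieter)   # 8.0 4.0
print(quieter / baseline)  # 0.5: perceived as about half as loud
```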

That is why acoustic simulations are so important: They can help design the functional aspect of a product and its sound as well.

How to Listen to an Acoustic Simulation

Let’s say you want to assess the noise of an electric vehicle. The major powertrain components that contribute to its sound are the motor and the gearbox. To assess these sounds, you first use multiphysics workflows that simulate these systems.

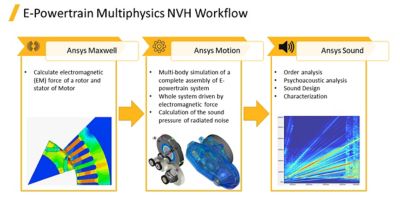

The acoustic simulation workflow to model the sounds from an electric vehicle powertrain.

The motor’s electromagnetics can be simulated using Ansys Maxwell and Ansys Mechanical, while the gearbox motion can be simulated using Ansys Motion. The results of these models can then be sent to Ansys Sound so engineers can listen to the product, and they can use what they hear to tweak both the design’s performance and its sound.
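The internal methods of Ansys Sound are not described here, so the following is only a generic illustration of the idea of listening to simulation results: a standard-library Python sketch that synthesizes a motor run-up from tonal components at fixed orders of shaft speed (each with frequency order × rpm / 60) and writes a 16-bit WAV file. The order numbers and amplitudes in `ORDERS`, and the function names, are hypothetical; in a real workflow the amplitudes would come from the electromagnetic, structural and multibody results and would vary with speed.

```python
import math
import struct
import wave

SAMPLE_RATE = 44_100  # samples per second

# Hypothetical tonal content as (order, relative amplitude) pairs, e.g. a gear-mesh
# order and two electromagnetic orders. Real values would come from simulation.
ORDERS = [(23.0, 0.5), (48.0, 0.3), (96.0, 0.1)]

def synthesize_run_up(rpm_start, rpm_end, duration_s, orders=ORDERS, fs=SAMPLE_RATE):
    """Return samples normalized to [-1, 1] for a linear ramp in shaft speed."""
    n_samples = round(duration_s * fs)
    phases = [0.0] * len(orders)
    out = []
    for n in range(n_samples):
        rpm = rpm_start + (rpm_end - rpm_start) * n / n_samples
        shaft_hz = rpm / 60.0
        sample = 0.0
        for i, (order, amplitude) in enumerate(orders):
            freq = order * shaft_hz
            if freq < fs / 2:  # drop components above Nyquist to avoid aliasing
                sample += amplitude * math.sin(phases[i])
            # Advance each tone's phase by its instantaneous frequency
            phases[i] = (phases[i] + 2.0 * math.pi * freq / fs) % (2.0 * math.pi)
        out.append(sample)
    peak = max((abs(s) for s in out), default=0.0) or 1.0
    return [s / peak for s in out]

def write_wav(path, samples, fs=SAMPLE_RATE):
    """Write mono 16-bit PCM samples in [-1, 1] to a WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(fs)
        wav.writeframes(b"".join(struct.pack("<h", int(s * 32767)) for s in samples))
```

For example, `write_wav("run_up.wav", synthesize_run_up(1000, 12000, duration_s=5.0))` produces a five-second sweep from 1,000 to 12,000 rpm. Accumulating each tone's phase from its instantaneous frequency, rather than evaluating sin(2πft) with a time-varying f, keeps the tones continuous as the speed changes.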

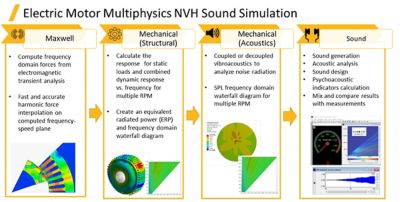

As the engineers learn more about their designs, they can expand the simulation to dig into the sound sources from the electric motor itself. This multiphysics workflow could include the structural analysis of static loads, electromagnetic forces, vibration analysis and acoustic noise radiation. To learn more about this workflow, watch: Listen to Your Simulations with Ansys.

The acoustic simulation workflow to model the sounds from an electric motor.
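In the electric-motor workflow described above, the final step is acoustic noise radiation. A common first estimate relates the sound power radiated by a vibrating surface to its surface-normal velocity: W = ρ c S σ ⟨v²⟩, where S is the radiating area, ⟨v²⟩ the spatially averaged mean-square normal velocity and σ the radiation efficiency. The Python sketch below applies that formula; the housing area, the velocity and σ = 1 (a value often assumed for large surfaces at high frequency) are hypothetical, and a full simulation workflow would compute the radiated field numerically instead.

```python
import math

RHO_AIR = 1.21  # kg/m^3, air density at roughly 20 C
C_AIR = 343.0   # m/s, speed of sound in air at roughly 20 C
W_REF = 1e-12   # W, reference sound power

def radiated_power(area_m2, mean_square_velocity, radiation_efficiency):
    """Sound power in W: W = rho * c * S * sigma * <v^2>."""
    return RHO_AIR * C_AIR * area_m2 * radiation_efficiency * mean_square_velocity

def sound_power_level(power_w):
    """Sound power level in dB re 1 pW."""
    return 10.0 * math.log10(power_w / W_REF)

# Hypothetical motor housing: 0.2 m^2 of surface vibrating at 1 mm/s RMS
power = radiated_power(area_m2=0.2, mean_square_velocity=(1e-3) ** 2, radiation_efficiency=1.0)
print(round(sound_power_level(power), 1))  # 79.2
```

Because power scales with velocity squared, halving the surface vibration lowers the sound power level by about 6 dB, which is why vibration reduction is often the first lever for motor noise.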

What Other Sounds Can Engineers Simulate?

Motor and vehicle sounds aren’t the only noises engineers need to optimize, so Ansys offers multiphysics simulation workflows for modeling the noise of many other products.

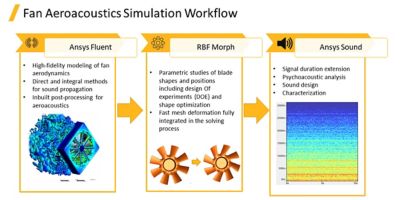

For instance, fans, compressors, pumps and blowers can be simulated using Ansys Fluent. The CFD software can be used to perform an aeroacoustics simulation that takes into account blade performance and turbulence. This data can then be passed onto Sound to get a 3D sound rendering for people to listen to. Then engineers can assess and optimize the sound quality based on this virtual model.

The acoustic simulation workflow of a fan.
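Tonal fan noise is usually concentrated at the blade passing frequency (BPF), the rate at which blades pass a fixed point, and at its harmonics, with broadband noise from turbulence underneath. A CFD-based aeroacoustics simulation resolves these components directly; the short Python sketch below only predicts where the tones should appear, which is a useful sanity check on a simulated spectrum. The blade count and speed are hypothetical.

```python
def blade_passing_frequency(n_blades: int, rpm: float) -> float:
    """BPF in Hz: number of blades times rotational speed in revolutions per second."""
    return n_blades * rpm / 60.0

def bpf_harmonics(n_blades: int, rpm: float, count: int = 3) -> list[float]:
    """First `count` multiples of the BPF, where tonal peaks are expected."""
    bpf = blade_passing_frequency(n_blades, rpm)
    return [k * bpf for k in range(1, count + 1)]

# Hypothetical 7-blade fan at 1,800 rpm
print(blade_passing_frequency(7, 1800))  # 210.0
print(bpf_harmonics(7, 1800))            # [210.0, 420.0, 630.0]
```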

Many rotating machines must comply with aeroacoustics standards. Acoustic simulations give engineers a cost-effective way to predict the noise of their designs and check that it meets those requirements.
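Noise limits for machinery are often stated as A-weighted levels (dBA), which de-emphasize low and very high frequencies roughly the way human hearing does. As a sketch of a simple compliance check on simulated output, the Python code below applies the analytic A-weighting curve from IEC 61672-1 to a set of band levels and compares the total with a limit. The band levels and the 75 dBA limit are hypothetical; the standard that governs a given machine defines the actual measurement or calculation procedure.

```python
import math

def a_weighting_db(f_hz: float) -> float:
    """A-weighting correction in dB at frequency f_hz (analytic form, IEC 61672-1)."""
    f2 = f_hz * f_hz
    ra = (12194.0**2 * f2**2) / (
        (f2 + 20.6**2)
        * math.sqrt((f2 + 107.7**2) * (f2 + 737.9**2))
        * (f2 + 12194.0**2)
    )
    return 20.0 * math.log10(ra) + 2.00  # +2.00 dB normalizes the curve to 0 dB at 1 kHz

def overall_dba(band_levels):
    """Energy-sum (center_frequency_hz, level_db) bands after applying A-weighting."""
    return 10.0 * math.log10(
        sum(10.0 ** ((level + a_weighting_db(f)) / 10.0) for f, level in band_levels)
    )

# Hypothetical simulated octave-band levels for a fan, in dB re 20 uPa
bands = [(125, 72.0), (250, 70.0), (500, 68.0), (1000, 66.0), (2000, 62.0), (4000, 58.0)]
LIMIT_DBA = 75.0  # hypothetical requirement
total = overall_dba(bands)
print(f"{total:.1f} dBA, {'pass' if total <= LIMIT_DBA else 'fail'}")
```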

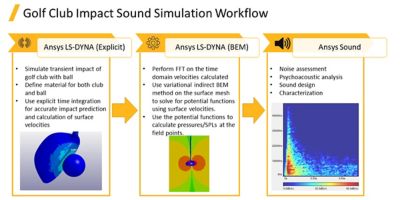

Designing the sounds of consumer goods is just as important as designing them for industrial products. For instance, no one wants a silent golf club. They want the satisfying snap of impacting the ball without the thud if they hit the dirt.

So, depending on the context, the sounds of impacts can be both desirable and undesirable. By simulating the products, engineers can minimize undesired sound and enhance the ones they want to embellish.

The acoustic simulation workflow of a golf club.

For golf clubs, the sound depends on the velocity and shape of the impact, as well as on the materials of the impacting objects and the medium they are in. This is challenging to test physically and to design by hand, but with Ansys LS-DYNA and Ansys Sound, engineers can ensure that a golf club makes a good smack when it contacts the ball and is nearly silent when it hits the ground.
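One way to see why material properties change the character of an impact is modal synthesis. This is a swapped-in simplification, not how LS-DYNA computes an impact: the sound is approximated as a sum of exponentially decaying sinusoids, one per structural mode, and a mode with damping ratio ζ and frequency f decays by 60 dB in ln(1000) / (ζ · 2πf) seconds. The Python sketch below uses hypothetical mode frequencies, damping ratios and amplitudes to contrast a ringing strike with a heavily damped one.

```python
import math

def decay_time_60db(freq_hz: float, damping_ratio: float) -> float:
    """Seconds for a mode's envelope exp(-zeta*omega*t) to fall by 60 dB (a factor of 1000)."""
    return math.log(1000.0) / (damping_ratio * 2.0 * math.pi * freq_hz)

def impact_sound(modes, duration_s, fs=44_100):
    """Sum of decaying sinusoids; modes holds (freq_hz, damping_ratio, amplitude) tuples.

    For light damping the ringing frequency is close to the undamped frequency,
    so freq_hz is used for both the oscillation and the decay rate.
    """
    samples = []
    for n in range(round(duration_s * fs)):
        t = n / fs
        samples.append(sum(
            amp * math.exp(-zeta * 2.0 * math.pi * f * t) * math.sin(2.0 * math.pi * f * t)
            for f, zeta, amp in modes
        ))
    return samples

# Hypothetical modes: a lightly damped strike versus a heavily damped one
crisp = [(3200.0, 0.002, 1.0), (5400.0, 0.003, 0.6)]
thud = [(3200.0, 0.05, 1.0), (5400.0, 0.08, 0.6)]
print(f"{decay_time_60db(3200.0, 0.002):.3f} s")  # rings for about 0.17 s
print(f"{decay_time_60db(3200.0, 0.05):.4f} s")   # dies out in about 7 ms
```

The samples returned by `impact_sound(crisp, 0.5)` and `impact_sound(thud, 0.5)` can be normalized and saved as audio to compare the two by ear.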