ANSYS BLOG

February 14, 2020

Here Are the Benefits to Digitally Developing AR HUD

Dirk Spiesswinkel is a product manager for EB GUIDE arware at Elektrobit.

When engineers incorporate augmented reality (AR) into head-up displays (HUDs), roads will become safer.

But as an added benefit, this technology will also help drivers trust in advanced driver assistance systems (ADAS) and autonomous vehicle (AV) technology.

An augmented reality head-up display informs a driver of road conditions.

What is an AR HUD?

AR HUD enables vehicles to communicate more information than a traditional dashboard. For instance, the system could indicate how the car interprets the environment, senses dangers, plans routes, communicates with other technologies and triggers ADAS.

There are three types of HUDs. The standard models, currently available, can project dashboard information in front of a windshield or in the driver’s field of view. As a result, people gain useful knowledge about the road and vehicle conditions without shifting their eyes from traffic.

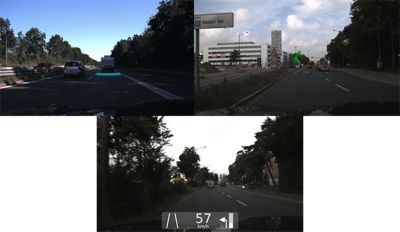

A contact-analogous AR marker highlights the leading vehicle (top, left). A display with an arrow animation shows the driver that a turn is coming up (top, right). A display interacts with its environment by showing the distance until the driver’s next left turn (bottom).

In the future, advanced AR HUDs will project complex graphics that correspond to objects in the real world. For instance, on a foggy night, when the car’s thermal sensors detect an animal or a person, the display could highlight that presence for the driver. This way, even if the human eye can’t see the person through the fog, the driver can still react.

The common building blocks of all of these display systems are:

- A data acquisition system composed of sensors and engine control units (ECUs)

- A data processing system that assesses which information should be displayed and how to visualize it

- A display system

Simple display systems could consist of a series of static icons or graphics on a windshield. More complex display systems will show contextual animations to the driver. Finally, full AR display systems will integrate with and adapt to the driver’s environment.
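As a rough illustration of how the three building blocks hand data to one another, here is a minimal Python sketch. Every name in it (`Detection`, `select_overlays`, `render`, the 80 m cutoff and the overlay styles) is hypothetical and chosen only for this example; it does not reflect the API of any Ansys or Elektrobit product.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by the (hypothetical) data acquisition system."""
    kind: str                 # e.g. "pedestrian" or "vehicle"
    distance_m: float         # distance from the car in meters
    visible_to_driver: bool   # False if fog or darkness hides the object


def select_overlays(detections, max_distance_m=80.0):
    """Data processing: decide what to show and how to visualize it."""
    overlays = []
    for d in detections:
        if d.distance_m > max_distance_m:
            continue  # too far away to be relevant yet (assumed cutoff)
        # Objects the driver cannot see get a stronger visual treatment.
        style = "outline" if d.visible_to_driver else "highlight"
        overlays.append((d.kind, style))
    return overlays


def render(overlays):
    """Display system: stand-in that returns draw commands as strings."""
    return [f"{style}:{kind}" for kind, style in overlays]


if __name__ == "__main__":
    frame = [
        Detection("pedestrian", 30.0, visible_to_driver=False),
        Detection("vehicle", 120.0, visible_to_driver=True),
    ]
    print(render(select_overlays(frame)))
```

A static-icon display would only need the `render` step, while a full AR display would also need each overlay positioned against the real-world object, which this sketch leaves out.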

How Will AR HUD Help Drivers Ease into Autonomous Vehicle Technology?

As ADAS takes more and more control over the car, a HUD can increase drivers’ understanding of these systems. As humans start to notice that they need to take over the wheel less often, they will start to gain confidence in self-driving cars and the technologies that enable them.

As drivers start to see that their car is better at sensing danger than they are, they will start to trust ADAS and AV systems.

The challenge is that AR HUDs will be difficult, or potentially dangerous, to design, test and validate in the real world while a human is in the loop. As a result, virtual prototyping and development will play a key part in reducing this technology’s time to market.

How to Develop HUD Systems

Traditional HUD development focuses on producing a clear image that doesn’t distract the driver. This means that the design must take into consideration its integration into the car and positioning relative to the driver.

It isn’t easy to predict what optical effects could pop up during the design cycle. Additionally, building physical prototypes could get costly and push development late into the car’s design cycle.

To develop this HUD system, engineers need to ensure it will look clear to the driver.

Therefore, engineers can use Ansys Speos to virtually address optical challenges of these displays. Using this method, defects that could be prevented early in development include:

- Dynamic distortion

- Fuzzy images

- Ghosting

- Vignetting

- Stray light

Adding AR to the display makes it more challenging to test and validate. The system needs to be tested dynamically to ensure it properly interacts with the environment. For instance, engineers need to ensure that it registers the surrounding traffic elements and quickly displays relevant information based on these inputs. As a result, the user experience (UX) and user interface (UI) of these systems have all of the optical challenges of a classic display with the added challenges of lag.
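To make the lag problem concrete, the short Python sketch below estimates how far an AR graphic drifts from its real-world target while the car is turning. It assumes a deliberately simplified model in which the only error source is the vehicle's yaw during the end-to-end latency; the function names and the example numbers (10 degrees per second, 50 ms, 20 m) are illustrative assumptions, not figures from Ansys or Elektrobit.

```python
import math


def angular_error_deg(yaw_rate_deg_s, latency_s):
    """Angle the car has turned between sensing and drawing a graphic."""
    return yaw_rate_deg_s * latency_s


def lateral_offset_m(error_deg, distance_m):
    """Sideways offset of the graphic at the target's distance."""
    return distance_m * math.tan(math.radians(error_deg))


if __name__ == "__main__":
    # Assumed example: gentle turn, 50 ms of total latency, target 20 m ahead.
    err = angular_error_deg(yaw_rate_deg_s=10.0, latency_s=0.05)
    off = lateral_offset_m(err, distance_m=20.0)
    print(f"angular error: {err:.2f} deg, offset at 20 m: {off:.2f} m")
```

Under these assumptions the marker would sit about 0.17 m to the side of the object it is meant to highlight, which is why latency has to be evaluated together with the optics.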

Therefore, the AR system needs to be tested on the road, which means that it will encounter all of the validation complexities of designing ADAS and AV systems. Namely, it’s hard to control physical environments safely and practically. For instance, if the system is tested on the road, it may not experience all of the scenarios that could trigger potential defects.

The answer is for engineers to simulate the traffic and driving scenarios so they can assess the AR HUD across all conceivable scenarios, variables and edge cases without risking the safety of test drivers or people on the roads.
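One simple way to picture such a scenario sweep is as a grid of test conditions. The Python sketch below builds that grid with `itertools.product`; the parameter names and values are hypothetical placeholders, and a real study would pass each scenario to a driving simulator rather than just counting them.

```python
from itertools import product

# Hypothetical scenario dimensions chosen for illustration only.
WEATHER = ["clear", "rain", "fog"]
TIME_OF_DAY = ["day", "dusk", "night"]
HAZARDS = ["none", "pedestrian", "animal", "stopped_vehicle"]
SPEEDS_KMH = [30, 50, 100]


def build_scenarios():
    """Return every combination of the scenario dimensions as a dict."""
    return [
        {"weather": w, "time_of_day": t, "hazard": h, "speed_kmh": s}
        for w, t, h, s in product(WEATHER, TIME_OF_DAY, HAZARDS, SPEEDS_KMH)
    ]


def is_edge_case(scenario):
    """Flag low-visibility scenarios that contain a hazard (assumed rule)."""
    return (
        scenario["weather"] == "fog"
        and scenario["time_of_day"] == "night"
        and scenario["hazard"] != "none"
    )


if __name__ == "__main__":
    scenarios = build_scenarios()
    edge_cases = [s for s in scenarios if is_edge_case(s)]
    print(len(scenarios), "scenarios,", len(edge_cases), "flagged as edge cases")
```

Even this small grid yields 108 combinations, which hints at why covering them on physical roads is impractical.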

The Benefits of Virtually Testing AR HUD

Engineers will notice other benefits from testing their display systems using simulation. For instance, it enables them to take the UX and UI into consideration early in development.

Engineers are assessing an AR HUD using virtual reality.

The display designs will often be constrained by the development of the car’s windshield and dashboard. Therefore, by inputting these geometries into a virtual reality (VR) environment, engineers can assess how these constraints affect the look and feel of the system. As the geometries change throughout development, it doesn’t take engineers long to assess how they affect the display.

Using simulation, engineers get an early sense of how the HUD:

- Affects the field of view

- Distracts the driver

- Reacts to latency, brightness and movement

- Displays information

- Affects the driver’s response to new information, safety warnings and edge cases

How to Virtually Test an AR HUD System

The first stage of virtually testing the display is to have a prototype of its UI/UX software. Engineers use EB GUIDE arware from Elektrobit to create the AR content and embedded software for the HUD system.

Then, engineers use Ansys VRXPERIENCE to create a real-time physics-based lighting simulation that models how the content will be displayed. The simulation can also test how sensors perceive the world to ensure the data acquisition system is working properly.

The simulation displays how a HUD system could highlight the lane, people on the street and the car ahead.

Next, Ansys VRXPERIENCE HMI lets engineers experience their HUD designs within an immersive digital reality environment.

The embedded software could then be added into the test and validation loop so engineers can virtually design, assess and test an augmented reality HUD prototype in real-life driving conditions.

For instance, engineers can use this setup to see how sensor filtering can affect the performance of the AR HUD system. Because of how humans perceive movement, AR systems will need a higher frequency of data collection than ADAS. Simulations can test whether the vehicle motion tracking is sufficient for the system to align its graphics to the real world and human vision.
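The interplay between filtering and sampling frequency can be sketched with a toy model. The Python example below assumes the motion signal is smoothed with a basic exponential moving average, which is an assumption made for illustration and not a description of how any particular product filters its data. It feeds the filter a steadily increasing yaw angle and measures how far the filtered value trails the true one, expressed in seconds.

```python
def ema_steady_state_lag_s(alpha, sample_rate_hz,
                           yaw_rate_deg_s=10.0, duration_s=5.0):
    """Simulate an exponential moving average on a ramp and return its lag.

    alpha is the smoothing factor (0 < alpha <= 1); all default values
    are assumed example numbers.
    """
    dt = 1.0 / sample_rate_hz
    steps = int(duration_s * sample_rate_hz)
    filtered = 0.0
    true_angle = 0.0
    for i in range(1, steps + 1):
        true_angle = yaw_rate_deg_s * i * dt          # constant-rate turn
        filtered = alpha * true_angle + (1 - alpha) * filtered
    # Angle error divided by the turn rate gives the lag in seconds.
    return (true_angle - filtered) / yaw_rate_deg_s


if __name__ == "__main__":
    for rate in (20, 100):
        lag = ema_steady_state_lag_s(alpha=0.2, sample_rate_hz=rate)
        print(f"{rate} Hz sampling: filter lag of about {lag:.3f} s")
```

With the same smoothing factor, the lag settles near 0.2 s at 20 Hz and near 0.04 s at 100 Hz, since this filter trails a ramp by (1 - alpha) / alpha samples. In this simplified model, sampling more often is what lets the graphics stay aligned without giving up smoothing.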

EB GUIDE arware and VRXPERIENCE provide a simulation toolkit to support the whole design process of a HUD. With it, engineers can test and validate prototypes. To learn more, watch the webinar: Virtual Development of Augmented Reality HUD Solutions or its repeat session.
