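Original equipment manufacturers (OEMs) and suppliers are working hard to advance autonomous driving technology. Gaining enough momentum to move from Level 2 to Level 3 (eyes-off) and higher levels of autonomy depends on the evolution of the operational design domain (ODD), the set of operating conditions under which an autonomous vehicle can operate safely.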

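To reach a level of automation at which the driver can take their eyes off the road, the automotive industry must demonstrate that the system's safety meets the requirements of Safety of the Intended Functionality (SOTIF) and Safety First for Automated Driving (SAFAD). Both SOTIF (ISO 21448) and SAFAD (ISO/TR 4804) stress the importance of safety validation for autonomous vehicles, first in hardware-in-the-loop (HIL) testing and then, for selected use cases, in real-world road testing.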

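As the ODD evolves and expands, testing vehicle perception performance across millions of scenarios and hard-to-record edge cases becomes more critical. To address these new perception challenges, the market is shifting to high-resolution cameras (8 megapixels and above), high-resolution imaging millimeter-wave radar, and high-resolution lidar. Broader ODD validation coverage also makes simulation more important. These solutions generate large volumes of data that must be transferred and processed both in simulation and in the final vehicle.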

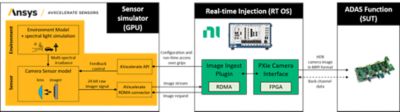

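The 2024 R1 release of Ansys AVxcelerate Sensors, the autonomous vehicle sensor simulation software, includes a major enhancement for testing perception software in hardware-in-the-loop environments that combine simulated and real systems. This physics-based solution is now compatible with the NI HIL infrastructure based on RoCE (RDMA over Converged Ethernet, where RDMA stands for remote direct memory access), which supports the large-scale, real-time, low-latency data exchange needed to validate higher levels of perception. Because Ansys camera simulation is already used in production environments with industrial-grade perception software on chip, RDMA compatibility further increases the solution's usefulness.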

HIL Bench Testing With Synthetic Simulation Data Prioritizes Safety Compliance

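Because it sits so close to production, HIL bench testing is an unavoidable part of testing and validating complex software systems. A HIL test bench aggregates synthetic simulation data inputs from sensors such as cameras, lidar, and radar, enabling a more accurate evaluation of autonomous vehicle (AV) systems.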

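HIL testing addresses the most challenging aspect of system validation: evaluating sensor perception functions that rely on deep neural networks trained on data. It is a valuable tool in the development process because it is the last step before mass production. Any issue that surfaces during HIL testing can be resolved at this stage in the electronic control unit (ECU) or embedded system that controls the affected function.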

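Because perception algorithms are so complex, they cannot be validated with traditional methods based mainly on theoretical analysis, nor with test approaches that have already shown their limits, such as over-the-air (OTA) camera and monitor hardware-in-the-loop setups. Instead, perception algorithms must be tested against large volumes of synthetic simulation data. This calls for direct data injection from simulation, which preserves the quality of the injected data in transit and removes the need to calibrate a camera pointed at a monitor.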

Direct Data Injection From Simulation Improves Bench Testing Accuracy

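Today, high-resolution sensors impose technical limits on HIL benches. The challenge stems from the traditional approach of sending synthetic video data to the bench over HDMI or DisplayPort. These ports impose Full HD resolution and data-transfer limits that cannot meet the real-time response rates autonomous driving requires.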
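To put the resolution gap in numbers, the short sketch below compares the pixel count of a Full HD frame with that of an 8-megapixel frame. The 3840 x 2160 layout is an assumption chosen for illustration; the article only states "8 megapixels and above" and does not name a specific sensor format.

```python
# Rough comparison of frame sizes; not tied to any specific sensor or port.
FULL_HD = (1920, 1080)      # Full HD frame, as referenced in the text
ASSUMED_8MP = (3840, 2160)  # ASSUMPTION: one common layout of roughly 8 megapixels


def pixels(resolution: tuple[int, int]) -> int:
    """Return the number of pixels in a (width, height) frame."""
    width, height = resolution
    return width * height


def pixel_ratio(a: tuple[int, int], b: tuple[int, int]) -> float:
    """Return how many times more pixels frame a holds than frame b."""
    return pixels(a) / pixels(b)


if __name__ == "__main__":
    print("Full HD pixels:", pixels(FULL_HD))
    print("Assumed 8 MP pixels:", pixels(ASSUMED_8MP))
    print("Ratio:", pixel_ratio(ASSUMED_8MP, FULL_HD))
```

Under this assumption, each 8-megapixel frame carries four times as many pixels as a Full HD frame, before bit depth and frame rate are taken into account.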

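Direct data injection based on simulated raw signals offers a workable way to test perception functions on a HIL bench. Traditional HIL techniques for testing camera chips, such as OTA camera capture, do not let engineers preserve or simulate the high dynamic range of real camera images, especially in scenarios such as night driving. Without high dynamic range, the camera's image signal processor (ISP) is not properly stimulated, so back-channel data from the chip cannot be integrated into the simulation loop.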

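With direct data injection, the environment and the hardware and optical aspects of the sensor are fully simulated, and only the raw signal is injected into the final part of the processing hardware and software. The result is more accurate HIL bench testing and AV perception validation. With raw data injection, the chip and its ISP are stimulated with relevant data, so overall behavior matches reality and back-channel data can be taken into account in the simulation loop. This means the ECU can be tested with its original series firmware, without first being put into a special "HIL mode."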

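For high-resolution camera or multi-sensor simulation, CPU-intensive data transfer is often a major obstacle to meeting the hard real-time requirements of a HIL bench. The AVxcelerate 2024 R1 release includes NI RDMA transport to address this challenge and keep data flowing smoothly.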

Ansys AVxcelerate Sensors Software + NI RDMA

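Ansys and NI (now part of Emerson) are collaborating to deliver real-time, physically realistic, high-resolution synthetic camera data for HIL validation and so overcome these testing limitations. They have developed a closed-loop simulation solution, powered by NI RDMA and Ansys AVxcelerate Sensors software, that lets customers inject simulated data directly into the input port of the device under test (DUT) through NI real-time hardware camera interface boards. Evaluating the relevant behavior of the ECU under test requires injecting accurate synthetic data, which is the main reason physically accurate simulation is needed. The physics-based, high-fidelity simulation in AVxcelerate software helps preserve complete scene information in fully dynamic 24-bit raw data images. As a result, imager spectral range adaptation, HDR imager/DSP simulation, and multi-exposure perception strategies can all be applied.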
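A small calculation may help show why 24-bit raw data matters for dark scenes. The sketch below estimates the theoretical dynamic range of a linear 24-bit value versus an 8-bit value, and shows how distinct dark 24-bit values collapse when reduced to 8 bits. The plain bit-shift truncation is a deliberate simplification and an assumption of this example: real display paths apply tone mapping and gamma, and nothing here describes how AVxcelerate or any NI hardware handles data internally.

```python
import math


def dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range of a linear signal with the given bit depth."""
    return 20.0 * math.log10(2 ** bits)


def truncate_to_8bit(value_24bit: int) -> int:
    """Reduce a linear 24-bit value to 8 bits by dropping the 16 low bits.

    ASSUMPTION: simple truncation stands in for a display-based path;
    real pipelines use tone mapping, so this is only an illustration.
    """
    if not 0 <= value_24bit < 2 ** 24:
        raise ValueError("value must fit in 24 bits")
    return value_24bit >> 16


if __name__ == "__main__":
    print(f"24-bit range: {dynamic_range_db(24):.1f} dB")
    print(f" 8-bit range: {dynamic_range_db(8):.1f} dB")
    # Two clearly different dark-scene values become indistinguishable.
    for raw in (1_000, 60_000, 70_000):
        print(raw, "->", truncate_to_8bit(raw))
```

In this simplified model, the values 1,000 and 60,000 both map to 0 after truncation, which is the kind of lost low-light detail that would leave an ISP without realistic stimulus.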

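In the AVxcelerate Sensors application, a subset of images can be generated in real time for fast, verifiable results. Compared with traditional simulation techniques, Ansys software delivers validated camera computer vision (CV) in a fraction of the time.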

NI and Ansys Close the Simulation Loop With RDMA Data Transfer Over Converged Ethernet

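NI RDMA is one part of this closed-loop system. It transfers large volumes of synthetic data with low latency and high bandwidth and hosts high-resolution camera feeds in real time. In essence, the NI RDMA driver software lets two or more systems exchange data over converged Ethernet using RDMA technology (RoCE). It abstracts the low-level details of programming RDMA-compatible interfaces and provides a simple, efficient application programming interface (API) for transferring data. NI has extended these capabilities with a software development kit (SDK) that enables simple, fast, vendor-agnostic connection to simulation environments that follow the same approach to openness and system compatibility.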
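To give a sense of the data volumes involved, the following back-of-the-envelope sketch estimates the raw bit rate of a camera stream and how much of a link it would occupy. It does not use or represent the NI RDMA API. The resolution (3840 x 2160), frame rate (30 fps), and link speed (25 Gb/s) are all assumptions picked for illustration, the `CameraStream` class and helper functions are hypothetical names, and protocol overhead is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraStream:
    """Hypothetical description of one uncompressed raw camera stream."""
    width: int
    height: int
    bits_per_pixel: int
    frames_per_second: float

    @property
    def frame_bits(self) -> int:
        """Size of a single frame in bits."""
        return self.width * self.height * self.bits_per_pixel

    @property
    def bit_rate(self) -> float:
        """Sustained payload rate in bits per second."""
        return self.frame_bits * self.frames_per_second


def frame_transfer_ms(stream: CameraStream, link_gbps: float) -> float:
    """Ideal time to move one frame over the link, ignoring overhead."""
    return stream.frame_bits / (link_gbps * 1e9) * 1e3


def link_utilization(streams: list[CameraStream], link_gbps: float) -> float:
    """Fraction of link capacity used by all streams together."""
    return sum(s.bit_rate for s in streams) / (link_gbps * 1e9)


if __name__ == "__main__":
    # ASSUMPTIONS: 8 MP layout, 24-bit raw pixels, 30 fps, 25 Gb/s link.
    cam = CameraStream(3840, 2160, 24, 30.0)
    print(f"Per-camera rate: {cam.bit_rate / 1e9:.2f} Gb/s")
    print(f"Frame transfer:  {frame_transfer_ms(cam, 25.0):.2f} ms")
    print(f"4 cameras use:   {link_utilization([cam] * 4, 25.0):.0%} of the link")
```

Under these assumed figures, a single camera produces roughly 6 Gb/s of payload, and four such cameras nearly fill the assumed link, which illustrates why a transport that avoids CPU-bound copies is attractive for multi-sensor benches.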

A Powerful Combination for Clearer Autonomous Perception

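Ansys AVxcelerate Sensors simulation software, NI hardware, and RDMA over a converged Ethernet link create a connection, or loop, to the physical world, enabling OEMs to position sensors on actual vehicles for further testing and validation. With this closed-loop solution, customers can visualize, analyze, and validate how a virtual prototype interacts with the hardware and real-time systems that run the perception algorithms. The solution enhances the test environment and its interfaces, covering the complete operating system, application stack, and hardware. It can also be extended to replay tests, comparing and contrasting simulated data with recorded real-world data by testing the perception algorithms and fully stimulating the advanced driver-assistance system (ADAS) ECU.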

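The collaboration between Ansys and NI helps improve the accuracy of vehicle perception technology and helps automakers master it quickly and safely during development, giving them a significant advantage.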

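Beyond cameras, Ansys and NI are also developing similar closed-loop solutions for other ADAS and AV sensors, including radar and lidar. You can read more about the partnership here.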
