
ANSYS BLOG

July 13, 2023

Psychoacoustics: Understanding the Listening Experience

We don’t all experience sound the same way. Our bodies, the environment, and cognitive context all feed into how we interpret the meaning of the sounds around us. Is it a sound we recognize? Is the sound surprising or expected? Is it congruent with what our other senses are telling us? Because what we hear is influenced so much by what we think we hear, any conversation about human hearing should include the study of sound perception, known as psychoacoustics.

What is Psychoacoustics?

Psychoacoustics is the study of how humans perceive sound. It’s a relatively young field that began in the late 1800s, in part to aid the development of communications. Psychoacoustics combines the physiology of sound (how our bodies receive it) with the psychology of sound (how our brains interpret it). Together, these sciences help us understand how and why sounds affect people differently.

The Physiology of Hearing

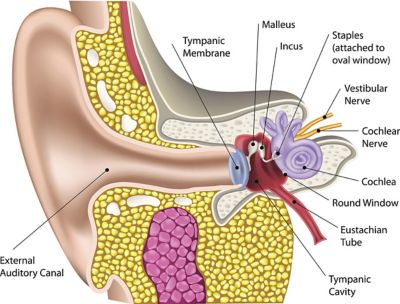

From the shape of our outer ears to the coil of our cochlea, even the resonant properties of our skulls — there are many physical attributes that affect the way sound waves move through our bodies and into our brains. Even as we age, our ability to process different frequencies changes, meaning that what we hear can be slightly different throughout our lives.

The anatomy of the human ear.

In general, humans can hear only a small portion of the acoustic spectrum. The human hearing range spans roughly 20 Hz to 20 kHz, which is well suited to speech recognition and music but misses very low- and very high-frequency sounds, such as the infrasound produced by volcanic eruptions.

Even the best-built ears among us can’t hear infrasound (below 20 Hz) or ultrasound (above 20 kHz). Some animals hear well beyond these limits (elephants into the infrasound range, bats and dolphins into the ultrasound range), but much of our world’s clamor is silent to humans because it falls outside the frequency range our physiology allows.
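The frequency limits above can be expressed as a small helper. As a sketch, the code below treats the nominal 20 Hz and 20 kHz limits as hard cutoffs (real limits vary from person to person and with age) and adds the standard A-weighting curve from IEC 61672, which approximates how much less sensitive the ear is toward the edges of its range than in the midrange. The function names are illustrative and not part of any Ansys API.

```python
import math

# Nominal limits from the text; actual hearing limits vary by person and age.
LOW_LIMIT_HZ = 20.0
HIGH_LIMIT_HZ = 20_000.0


def classify_frequency(freq_hz: float) -> str:
    """Label a frequency as infrasound, audible, or ultrasound."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    if freq_hz < LOW_LIMIT_HZ:
        return "infrasound"
    if freq_hz > HIGH_LIMIT_HZ:
        return "ultrasound"
    return "audible"


def a_weighting_db(freq_hz: float) -> float:
    """A-weighting gain in dB (IEC 61672 analytic form), about 0 dB at 1 kHz."""
    f2 = freq_hz ** 2
    ra = (12194.0 ** 2 * f2 ** 2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(ra) + 2.0


for f in (10.0, 100.0, 1_000.0, 30_000.0):
    print(f"{f:>8.0f} Hz  {classify_frequency(f):<10}  A-weight {a_weighting_db(f):6.1f} dB")
```

The large negative weights at low frequencies reflect the same limitation the text describes: sounds near the bottom of the range must be much more intense to be heard.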

Physiological factors that can affect sound perception include:

- Ear shape and size

- Bone density

- Body weight

The Psychology of Hearing

While physical differences affect what we hear, our brains decide how we hear it. Our ears aren’t precise instruments, so our brains compensate: they work out what a sound means, drawing on both immediate context and personal experience to identify its source and intent.

Like many other animals, we use hearing as a primary warning system: should we run, or should we laugh? The answer depends less on the sound itself than on how our brains interpret it. If we hear a glass break in a busy restaurant, we assume a server dropped a drink. Not scary. But if the same sound wakes us from sleep, our brains go into fight-or-flight mode. The context of a sound is one of many factors considered in psychoacoustics.

If you read the word “green” printed in red ink, the mismatch between the word and its color can give your mind a second of pause (a phenomenon known as the Stroop effect). Context can trick our brains through sound, too. Our minds constantly work to identify sounds, relying on visual and environmental cues to process audio information efficiently. Inside our own heads, the perception of a sound is our reality, even when it isn’t accurate.

Auditory Illusions

These examples show how context can influence our brain’s interpretation of what we hear, which can even stimulate an emotional response.

- Does the pitch go up or down? (Context and audition experiment by Daniel Pressnitzer)

- What name do you hear? (Yanny or Laurel experiment by Brad Story)

- Can you trust your ears? (Audio illusions by ASAPScience)

- The sound illusion in intense movies (Shepard tone by Vox)

Does It Sound Good or Bad?

One of the most subjective aspects of how we perceive sound is whether we judge it to be good or bad. Beyond our own experiences and preferences, several characteristics of a sound help determine whether it strikes us as annoying or pleasant. Together, these psychoacoustic indicators help us form opinions about sounds.

Psychoacoustic Indicators

- Pitch

- Loudness

- Sharpness

- Tonality

- Duration

- Roughness
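Several of these indicators have standard textbook approximations that map physical quantities onto perceptual scales. The sketch below, which is not the method used by Ansys Sound, converts frequency to the Bark critical-band scale (Zwicker and Terhardt’s 1980 approximation), on which Zwicker-style models of loudness, sharpness, and roughness are built, and converts loudness level in phons to loudness in sones, where each 10-phon increase roughly doubles perceived loudness. The sone rule is only a good fit at about 40 phons and above, so the code rejects lower values.

```python
import math


def hz_to_bark(freq_hz: float) -> float:
    """Critical-band rate in Bark (Zwicker & Terhardt, 1980 approximation)."""
    return 13.0 * math.atan(0.00076 * freq_hz) + 3.5 * math.atan((freq_hz / 7500.0) ** 2)


def phon_to_sone(loudness_level_phon: float) -> float:
    """Loudness in sones: 40 phons = 1 sone, and +10 phons doubles loudness."""
    if loudness_level_phon < 40.0:
        raise ValueError("the doubling rule is only a good fit at 40 phons and above")
    return 2.0 ** ((loudness_level_phon - 40.0) / 10.0)


for f in (100.0, 1_000.0, 4_000.0):
    print(f"{f:>6.0f} Hz -> {hz_to_bark(f):5.2f} Bark")
for p in (40.0, 50.0, 60.0):
    print(f"{p:.0f} phon -> {phon_to_sone(p):.0f} sone")
```

Both scales are nonlinear on purpose: equal steps in Bark or sones are meant to correspond to roughly equal steps in what listeners perceive, not in what a microphone measures.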

What makes a sound “good” or not depends on how your brain interprets these indicators. For example, a G# is the same note whether it’s played on a flute or emitted by a smoke alarm, yet the two sound very different. Your brain interprets the psychoacoustic indicators from the flute as soothing, while the same note from the smoke alarm is perceived as threatening.
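One way to see why the same note can sound so different: in twelve-tone equal temperament a note’s fundamental frequency is fixed (G#4 is about 415.3 Hz when A4 is tuned to 440 Hz), but the strength of its harmonics, which shapes timbre, depends on the source. The sketch below uses hypothetical harmonic amplitudes for a flute-like and an alarm-like tone (illustrative values, not measured spectra) and compares their spectral centroids, a simple proxy for perceived brightness rather than a standardized sharpness metric.

```python
A4_HZ = 440.0  # assumption: standard concert pitch reference


def midi_to_hz(midi_note: int) -> float:
    """Equal-tempered frequency; MIDI note 69 is A4."""
    return A4_HZ * 2.0 ** ((midi_note - 69) / 12.0)


def spectral_centroid(f0_hz: float, harmonic_amps: list[float]) -> float:
    """Amplitude-weighted mean frequency of the harmonic series f0, 2*f0, 3*f0, ..."""
    freqs = [f0_hz * (k + 1) for k in range(len(harmonic_amps))]
    return sum(f * a for f, a in zip(freqs, harmonic_amps)) / sum(harmonic_amps)


G_SHARP_4 = 68  # MIDI note number for G#4
f0 = midi_to_hz(G_SHARP_4)

# Hypothetical harmonic amplitudes (illustrative only, not measured spectra).
flute_like = [1.0, 0.3, 0.1]
alarm_like = [0.2, 0.5, 1.0, 1.0, 0.8]

print(f"G#4 fundamental: {f0:.2f} Hz")
print(f"flute-like centroid: {spectral_centroid(f0, flute_like):.0f} Hz")
print(f"alarm-like centroid: {spectral_centroid(f0, alarm_like):.0f} Hz")
```

Both tones share the same pitch, but the alarm-like recipe pushes most of its energy into higher harmonics, which is one reason such a sound reads as harsher.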

Applications for Psychoacoustics

So much of our world is experienced through sound that it’s not surprising psychoacoustics is an important tool across many industries:

- Music and film production

- Automotive performance

- Building and environmental design

- Communications devices

- Community alert systems

Engineers in many fields rely on psychoacoustics to understand how sound will shape the way their designs are experienced. An automotive engineer might use it to heighten the thrill of accelerating in a sports car, while a civil engineer might use it to assess how highway noise could affect visitors’ enjoyment of a nearby park.

How is Psychoacoustics Quantified?

The human brain is incredibly hard to measure. While you can objectively observe physical and chemical changes in the brain, perception is much harder to model. The study of sound perception therefore relies on psychoacoustic experiments in which listeners are asked to evaluate different aspects of a sound, such as pitch and timbre. Researchers then analyze these ratings statistically to build models that predict how sounds will be perceived.
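As a minimal sketch of that statistical step, the code below summarizes listener ratings for each sound as a mean with an approximate 95% confidence interval, then fits a straight line relating the mean ratings to one measured indicator by ordinary least squares. Real studies typically use larger panels, t-based intervals for small samples, and multi-variable or nonlinear models; the function names and the toy numbers below are hypothetical.

```python
import math
from statistics import mean, stdev


def mean_with_ci(ratings: list[float], z: float = 1.96) -> tuple[float, float, float]:
    """Mean rating with a normal-approximation confidence interval (mean, lo, hi)."""
    m = mean(ratings)
    half_width = z * stdev(ratings) / math.sqrt(len(ratings))
    return m, m - half_width, m + half_width


def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


# Toy data: each inner list holds hypothetical 1-10 annoyance ratings for one sound.
ratings_per_sound = [[2, 3, 2, 4], [5, 4, 6, 5], [8, 7, 9, 8]]
loudness_sone = [4.0, 8.0, 16.0]  # hypothetical indicator values for the same sounds

means = [mean_with_ci(r)[0] for r in ratings_per_sound]
slope, intercept = fit_line(loudness_sone, means)
print(f"predicted annoyance = {slope:.2f} * loudness + {intercept:.2f}")
```

Once fitted, such a model can score new designs without running a fresh listening test, which is the practical payoff of the statistical approach.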

Engineering software like Ansys Sound uses mathematical and computational techniques to simulate how the human brain processes sound. While these models can’t account for every nuance of perception, they can help predict how sounds will be experienced under different conditions and identify effects like auditory masking (when one sound makes another harder or impossible to hear), giving engineers valuable insights for optimizing their designs.
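Auditory masking is a good example of the kind of effect such models capture. The sketch below is not Ansys Sound’s algorithm; it uses the classic Schroeder spreading function from perceptual audio coding, defined on the Bark (critical-band) scale, to estimate whether a quieter probe tone is hidden by a louder masker. The fixed offset between the masker level and the masking threshold (`offset_db`) is a simplifying assumption; published models vary it with how tonal or noise-like the masker is.

```python
import math


def hz_to_bark(freq_hz: float) -> float:
    """Critical-band rate in Bark (Zwicker & Terhardt approximation)."""
    return 13.0 * math.atan(0.00076 * freq_hz) + 3.5 * math.atan((freq_hz / 7500.0) ** 2)


def spreading_db(delta_bark: float) -> float:
    """Schroeder spreading function: masking spread in dB vs. Bark distance.

    Positive delta_bark means the probe lies above the masker in frequency.
    """
    x = delta_bark + 0.474
    return 15.81 + 7.5 * x - 17.5 * math.sqrt(1.0 + x * x)


def is_masked(masker_hz: float, masker_db: float,
              probe_hz: float, probe_db: float,
              offset_db: float = 10.0) -> bool:
    """True if the probe falls below the masker's estimated masking threshold.

    offset_db is a hypothetical fixed offset; real models make it depend on
    whether the masker is tonal or noise-like.
    """
    delta = hz_to_bark(probe_hz) - hz_to_bark(masker_hz)
    threshold_db = masker_db + spreading_db(delta) - offset_db
    return probe_db < threshold_db


print(is_masked(1_000, 70, 1_100, 50))  # probe close to the masker in frequency
print(is_masked(1_000, 70, 4_000, 50))  # probe far above the masker
```

The spreading function is asymmetric: it falls off more slowly above the masker than below it, matching the well-known tendency of masking to spread upward in frequency.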

Sound Localization

How our brains identify a sound’s position of origin within our environment is called sound localization. Is it behind or in front of us? Is it high or low? For example, in a movie, sound localization tells us which direction a dinosaur is going to attack from. It’s also helpful in detecting an approaching bicycle on a trail or knowing when to pull over for an emergency vehicle.

Psychoacoustics is important to sound localization because it provides insight into how we interpret the acoustic cues we receive. Does the bicycle have enough room on the trail, or do we need to step to one side? Is the emergency vehicle getting closer or moving away? To answer these questions, our brains compare the information gathered by both ears, including differences in arrival time and intensity.
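The timing cue can be estimated with Woodworth’s classic spherical-head approximation, which treats the head as a rigid sphere: the interaural time difference (ITD) for a distant source at azimuth θ is (a/c)(θ + sin θ), where a is the head radius and c the speed of sound. The sketch below assumes a head radius of 8.75 cm and a speed of sound of 343 m/s (typical textbook values) and inverts the formula by bisection to estimate direction from an ITD. It ignores the intensity cue and the front/back ambiguity that real listeners resolve with head movements and the shape of the outer ear.

```python
import math

HEAD_RADIUS_M = 0.0875     # assumption: typical adult head radius
SPEED_OF_SOUND_MS = 343.0  # assumption: air at about 20 degrees Celsius


def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth ITD for azimuth 0 (straight ahead) to 90 degrees (to one side)."""
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND_MS * (theta + math.sin(theta))


def azimuth_from_itd(itd_s: float, tol_deg: float = 1e-6) -> float:
    """Invert the Woodworth model by bisection (ITD rises monotonically up to 90 degrees)."""
    lo, hi = 0.0, 90.0
    if not 0.0 <= itd_s <= itd_seconds(hi):
        raise ValueError("ITD outside the range the model can produce")
    while hi - lo > tol_deg:
        mid = (lo + hi) / 2.0
        if itd_seconds(mid) < itd_s:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


for az in (0, 30, 60, 90):
    print(f"{az:>2} deg -> ITD {itd_seconds(az) * 1e6:5.0f} microseconds")
print(f"300 microseconds -> about {azimuth_from_itd(300e-6):.1f} deg")
```

Even the largest difference this model predicts is well under a millisecond, which shows how finely the auditory system must compare the signals from the two ears.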

To experience sound localization, put your headphones on and take a seat at this virtual barbershop by QSound Lab.

Giving Meaning to Sound

The study of psychoacoustics helps us understand how humans make sense of what they hear. Through the powerful combination of our auditory system and cognitive abilities, we not only receive the sounds around us but also assign meaning to them, which helps us stay safe, communicate, and enjoy the never-ending concert of our world.

Learn more about the important role that sound plays in product design by viewing our webinar “Listen to Your Simulations with Ansys.”