At dinner with a few engineers and product leaders, someone asked, “Is AI actually useful in simulation, or is it just the trend of the decade?” Short answer: AI isn’t a stunt. It’s steadily influencing how we simulate, how quickly we learn, and how confidently we decide. The headlines focus on chatbots, but the real action is inside solvers, across workflows, and in how teams plan experiments.

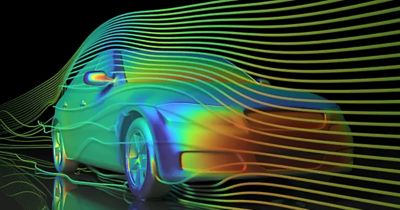

Why Simulation Matters – and Where AI Fits

Simulation helps us “see” the invisible — stress hot spots in a bracket, airflow over a wing, microscale effects that shape macro performance. Classic high-fidelity finite element method (FEM) and computational fluid dynamics (CFD) remain trusted for accuracy, but they can be compute-intensive and expertise-heavy. Artificial intelligence (AI) is not replacing physics; AI is augmenting it.

Think of AI as a set of accelerators and co-pilots that may:

- reduce numerical effort

- streamline repetitive setup and reporting work

- widen the design space you can explore within typical budgets and timelines – when validated for the specific use case

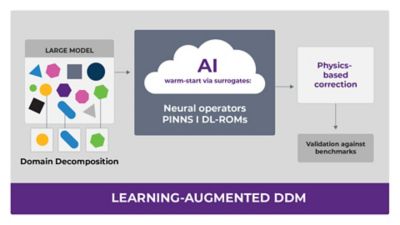

Faster Solves Without Giving Up Robustness: AI + Domain Decomposition

In large models, domain decomposition methods (DDMs) split a big problem into subproblems that run in parallel and then stitch results together. A modern AI-inspired twist is learning-augmented DDM, in which deep-learning models learn patterns in subdomains and across interfaces so the solver can start closer to a solution and potentially converge in fewer iterations. A subsequent physics-based correction step is typically applied, which can help preserve numerical robustness and reduce wall time for suitable problems. Actual benefits are problem-dependent and should be confirmed against trusted benchmarks.
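The warm-start idea can be illustrated with a minimal sketch. It assumes a 1D Poisson problem, two overlapping subdomains, and a classical alternating Schwarz iteration; none of these specifics come from the article. The "learned" initial guess is a hypothetical stand-in (the analytic solution scaled to be 5% low), not a trained model, and the Schwarz sweeps play the role of the physics-based correction step.

```python
import math

def solve_dirichlet(f, h, left, right):
    """Thomas algorithm for -u'' = f on interior nodes with Dirichlet end values."""
    n = len(f)
    rhs = [h * h * fi for fi in f]
    rhs[0] += left
    rhs[-1] += right
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    c[0], d[0] = -0.5, rhs[0] / 2.0
    for i in range(1, n):
        denom = 2.0 + c[i - 1]
        c[i] = -1.0 / denom
        d[i] = (rhs[i] + d[i - 1]) / denom
    u = [0.0] * n
    u[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        u[i] = d[i] - c[i] * u[i + 1]
    return u

def schwarz(f, h, u0, a, b, tol=1e-10, max_iter=10_000):
    """Alternating Schwarz on nodes [0, b] and [a, N], with overlap a < b.

    Returns the converged field and the number of sweeps used.
    """
    u = list(u0)
    N = len(u) - 1
    for it in range(1, max_iter + 1):
        old_a, old_b = u[a], u[b]
        u[1:b] = solve_dirichlet(f[1:b], h, u[0], u[b])          # left subdomain
        u[a + 1:N] = solve_dirichlet(f[a + 1:N], h, u[a], u[N])  # right subdomain
        if max(abs(u[a] - old_a), abs(u[b] - old_b)) < tol:
            return u, it
    return u, max_iter

N = 40
h = 1.0 / N
x = [i * h for i in range(N + 1)]
f = [math.pi ** 2 * math.sin(math.pi * xi) for xi in x]  # exact solution: sin(pi x)
a, b = 18, 22                                            # overlap region in node indices

cold = [0.0] * (N + 1)                                   # no prior knowledge
warm = [0.95 * math.sin(math.pi * xi) for xi in x]       # hypothetical surrogate guess
warm[0] = warm[N] = 0.0                                  # enforce boundary conditions

u_cold, it_cold = schwarz(f, h, cold, a, b)
u_warm, it_warm = schwarz(f, h, warm, a, b)
print(f"cold start: {it_cold} sweeps, warm start: {it_warm} sweeps")
```

Both runs converge to the same discrete solution; the warm start only changes how many sweeps are needed. How large the saving is in a production solver depends on the problem and on the quality of the learned guess.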

Artificial intelligence (AI) + domain decomposition to speed up simulation runs

Why should executives care? Mechanical, aerospace, energy, and semiconductor models keep growing. An approach that reduces iterations without rewriting core solvers can mean shorter queues, faster turnarounds on late-stage changes, and less pressure to over-provision clusters — subject to validation and governance.

Surrogates That Generalize: Neural Operators, PINNs, and Deep-Learning ROMs

Three families of surrogates are moving from papers to practice in some settings:

- Neural operators learn a mapping from inputs (geometry, boundary conditions, material parameters) to full solution fields. The Fourier neural operator (FNO) is a widely studied example. When properly trained and validated in its domain of applicability, such surrogates can enable near-interactive “what-ifs” without re-running a full high-fidelity solve.

- Physics-informed neural networks (PINNs) incorporate governing-equation residuals directly in the loss function. They are often explored in cases where data is sparse or noisy and for inverse problems — inferring parameters that are difficult to measure.

- Deep-learning reduced-order models (DL-ROMs) learn low-dimensional manifolds and their dynamics, enabling near-real-time predictions for parametric studies. They avoid intrusive code changes and pair well with classical proper orthogonal decomposition (POD) intuition.
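To make the PINN bullet concrete, one commonly used generic form of the training objective is shown below. The notation is an assumption for illustration: $u_\theta$ is the network, $\mathcal{N}$ the governing-equation operator, $\mathcal{B}$ the boundary operator with data $g$, and $\lambda_r$, $\lambda_b$ are user-chosen weights.

```latex
\mathcal{L}(\theta) =
  \frac{1}{N_d}\sum_{i=1}^{N_d} \bigl\| u_\theta(x_i) - u_i \bigr\|^2
  + \lambda_r \,\frac{1}{N_r}\sum_{j=1}^{N_r} \bigl\| \mathcal{N}[u_\theta](x_j) \bigr\|^2
  + \lambda_b \,\frac{1}{N_b}\sum_{k=1}^{N_b} \bigl\| \mathcal{B}[u_\theta](x_k) - g(x_k) \bigr\|^2
```

The first term fits the available measurements, the second penalizes the governing-equation residual at collocation points, and the third enforces boundary conditions. When data is sparse, the residual term carries most of the information.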

Surrogates don’t replace your baseline “truth” model; they front-load learning. You may run the faster surrogate to reach directionally useful conclusions, then reserve high-fidelity cycles for a short list of candidates. Always document training data, limits of applicability, and validation results.
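The screen-then-verify pattern described above might look like the following sketch. Both model functions are hypothetical stand-ins (a cheap quadratic trend and a slightly different "expensive" objective), chosen only to show the control flow and the bookkeeping of high-fidelity calls.

```python
import math

calls = {"high_fidelity": 0}

def high_fidelity(x):
    """Stand-in for an expensive solve (hypothetical objective, lower is better)."""
    calls["high_fidelity"] += 1
    return (x - 0.62) ** 2 + 0.05 * math.sin(25 * x)

def surrogate(x):
    """Cheap approximation that captures the trend but not the fine detail."""
    return (x - 0.60) ** 2

def screen_then_verify(candidates, top_k):
    """Rank every candidate with the surrogate, then verify only the shortlist."""
    shortlist = sorted(candidates, key=surrogate)[:top_k]
    results = {x: high_fidelity(x) for x in shortlist}
    best = min(results, key=results.get)
    return best, results

candidates = [i / 50 for i in range(51)]
best, results = screen_then_verify(candidates, top_k=5)
print(f"screened {len(candidates)} designs, verified {calls['high_fidelity']}, best x = {best}")
```

The promotion rule here is a fixed shortlist size. In practice, that rule (and the point at which the surrogate is no longer trusted) belongs in the documented limits of applicability.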

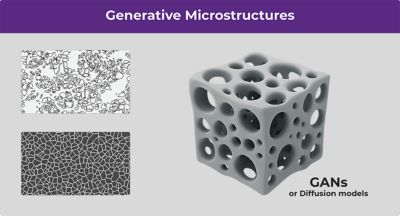

Materials and Biomechanics: Synthetic Microstructures at Scale

A recurring bottleneck in multiscale modeling is data scarcity. Generative models — generative adversarial networks (GANs) and diffusion models — can produce realistic 2D/3D microstructures (for example, bone, porous media, and composites) that match target statistics reported in the literature. Teams use these as inputs to homogenization or FE² workflows to explore structure-property-process trade-offs without relying solely on new scans or destructive tests. As with any synthesized data, traceability, representativeness, and validation are essential.
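One simple way to check that a synthesized microstructure "matches target statistics" is to compare its volume fraction and two-point probability against reference values. The sketch below assumes a binary 2D image with periodic boundaries in x; the striped test image and the target numbers are illustrative, not taken from any dataset.

```python
def volume_fraction(img):
    """Fraction of pixels that belong to phase 1."""
    cells = [v for row in img for v in row]
    return sum(cells) / len(cells)

def two_point_x(img, r):
    """Probability that two pixels r apart along x (periodic) are both phase 1."""
    hits = total = 0
    for row in img:
        width = len(row)
        for i in range(width):
            hits += row[i] * row[(i + r) % width]
            total += 1
    return hits / total

def matches_target(img, target_vf, target_s2, tol=0.05):
    """True if volume fraction and every listed S2(r) are within tol of the target."""
    if abs(volume_fraction(img) - target_vf) > tol:
        return False
    return all(abs(two_point_x(img, r) - s2) <= tol for r, s2 in target_s2.items())

# Illustrative "synthetic" sample: vertical stripes two pixels wide.
synthetic = [[1, 1, 0, 0] for _ in range(4)]
ok = matches_target(synthetic, target_vf=0.5, target_s2={1: 0.25, 2: 0.0})
print("accept sample" if ok else "reject sample")
```

Real workflows typically use richer descriptors (3D, multiple directions, lineal-path or chord-length statistics), but the accept/reject structure is the same.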

Leaders’ Takeaways: AI + Simulation

Why this matters

AI is not a substitute for physics-based modeling. Used correctly, it accelerates established workflows and improves the quality and traceability of decisions.

- Faster time to decision. Learning-augmented domain decomposition and surrogate warm-starts can reduce solver iterations and queue time, allowing more “what-ifs” in the same wall-clock time.

- Lower cost per insight. Use reduced-order models and AI-based surrogates to screen the design space; promote only the most promising candidates to high-fidelity FEM/CFD.

- Higher confidence, not just speed. Hybrid approaches keep physics in the loop, retrieval-augmented generation (RAG) helps written outputs cite approved standards, and basic uncertainty quantification reports ranges rather than single values.

- Scalability on demand. Cloud or high-performance computing (HPC) with AI-assisted orchestration lets you scale when the business needs answers, enabling bursts of parametric studies without persistent over-provisioning.

Pragmatic first steps

- Target one workflow with clear pain (for example, recurring CFD with meshing bottlenecks), and define success in measurable terms (for instance, 30% less wall-clock time and three times as many variants explored).

- Pilot a surrogate on a “golden” historical dataset, and document its training data, limits of applicability, promotion rules to high fidelity, and benchmark results.

- Introduce a large language model (LLM) assistant with RAG, restricted to vetted playbooks and templates; require engineer review and sign-off on all generated plans and reports.

Governance essentials

Version your data and models, cite sources, restrict sensitive access, and route to human review when confidence is low.

Illustrative examples of generative microstructures synthesized using generative adversarial networks (GANs) and diffusion models

Why synthetic microstructures matter: Larger, better-curated microstructure libraries can enable smarter screening. You can discover non-obvious material configurations earlier, reduce imaging or lab time, and focus physical experiments where they’re most relevant to making decisions.

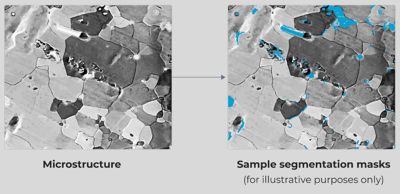

Segmentation: Turning Pixels and Point Clouds Into Simulation-Ready Geometry

Before any solver runs, teams need clean, labeled geometry. Segmentation is the step that converts raw data — CT/MRI volumes, micro-CT of materials, optical images, or lidar/point clouds — into regions a solver understands (organs versus vessels, phases in a composite, defects versus base material, components in an assembly). Done well, it reduces manual cleanup, speeds meshing, and makes boundary conditions and material assignments repeatable.

Illustration of the segmentation process to label intricate regions in material microstructures for subsequent material property assignment

Where AI helps:

- Supervised 2D/3D models (for instance, U-Net-style encoders/decoders) can learn to delineate structures from labeled examples.

- Label-efficient approaches (weak supervision, few labels, self-supervised pretraining) can reduce annotation burdens when ground truth is scarce.

- Promptable segmentation models let experts guide the mask with clicks/boxes, trading “perfect automation” for fast, reviewable results.

Workflow considerations with AI-assisted segmentation:

- Mask quality assurance (QA) and uncertainty. Use simple checks (connectivity, topology, volume bounds) and model-uncertainty estimates. When uncertainty is high, escalate to human review.

- Geometry repair and meshing. Convert masks to watertight surfaces, smooth where appropriate, and ensure conformal interfaces for multimaterial meshes.

- Propagation of uncertainty. When segmentation is ambiguous, carry that through simulation with ranges (for example, material fraction bands) rather than a single-point mask.
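The "simple checks" in the mask QA item can be as small as the sketch below, which counts 4-connected components and tests the filled fraction against bounds. The thresholds and the two example masks are assumptions for illustration; any returned issue would route the mask to human review.

```python
from collections import deque

def count_components(mask):
    """Count 4-connected foreground components in a binary 2D mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                count += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill of one component
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count

def qa_mask(mask, expected_components=1, fraction_bounds=(0.05, 0.60)):
    """Return a list of issues; an empty list means the mask passed these checks."""
    issues = []
    found = count_components(mask)
    if found != expected_components:
        issues.append(f"connectivity: found {found} components, expected {expected_components}")
    filled = sum(map(sum, mask)) / (len(mask) * len(mask[0]))
    low, high = fraction_bounds
    if not low <= filled <= high:
        issues.append(f"volume: filled fraction {filled:.2f} outside [{low}, {high}]")
    return issues

clean = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
split = [[1, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
for name, mask in (("clean", clean), ("split", split)):
    print(name, qa_mask(mask) or "passed")
```

These checks catch gross errors only. They complement, rather than replace, model-uncertainty estimates and expert review.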

Using AI in the segmentation stage can provide faster, more consistent preprocessing; fewer late-stage surprises from mislabeled regions; and clearer traceability for audits, manufacturing quality, or clinical review. As always, results are problem-dependent and should be validated against trusted benchmarks before being widely adopted.

LLMs in the Product Workflow: From Setup to Sign-Off

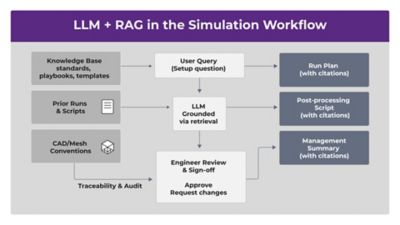

A surprising amount of engineering time lives in the process around simulation — geometry cleanup, meshing notes, convergence troubleshooting, result summaries, and compliance reports. Large language models (LLMs) paired with retrieval-augmented generation (RAG) can assist with the glue work, such as answering setup questions with references to your internal standards, drafting run plans and postprocessing scripts, and turning raw plots into management-ready narratives.

Large language model (LLM) + retrieval-augmented generation (RAG) to streamline the simulation workflow

Two principles keep this approach useful and controlled:

- Grounding beats guessing. Point models at a vetted knowledge base (playbooks, manuals, approved templates) so answers are consistent and citable.

- Human-in-the-loop review. Treat outputs as drafts. Engineers approve changes, ensuring alignment with methods and compliance requirements.
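Both principles can be shown in a toy sketch. It assumes a tiny in-memory knowledge base with made-up document IDs and uses keyword overlap as a stand-in for the embedding-based retrieval a real RAG system would use. The point is the control flow: answer only from vetted sources, cite them, and escalate when nothing relevant is found.

```python
import re

# Hypothetical vetted knowledge base; IDs and text are illustrative only.
KNOWLEDGE_BASE = [
    {"id": "PB-001", "text": "CFD meshing playbook: use inflation layers on walls "
                             "and check y-plus before the run."},
    {"id": "PB-002", "text": "Structural reporting template: list load cases, "
                             "safety factors, and convergence evidence."},
]
STOPWORDS = {"a", "an", "and", "the", "of", "on", "is", "for", "to"}

def tokenize(text):
    """Lowercase word tokens with common stopwords removed."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}

def retrieve(question, docs=KNOWLEDGE_BASE, min_overlap=2):
    """Return grounding context with a citation, or escalate to a human reviewer."""
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(d["text"])), d) for d in docs]
    score, best = max(scored, key=lambda pair: pair[0])
    if score < min_overlap:
        return {"status": "needs_human_review", "sources": []}
    # In a full pipeline, best["text"] would be passed to the LLM as context.
    return {"status": "draft", "sources": [best["id"]], "context": best["text"]}

grounded = retrieve("How many inflation layers should a CFD wall mesh use?")
ungrounded = retrieve("What is the fatigue life of titanium?")
print(grounded["status"], grounded["sources"])
print(ungrounded["status"])
```

Even the grounded result is labeled a draft: the engineer's review and sign-off remain the approval step.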

Differentiable Simulation: Gradients as a Feature

Differentiable simulation frameworks bring automatic differentiation to physics kernels, enabling shape and topology optimization, control tuning, and materials calibration with direct gradients. This does not replace classic adjoint methods across the board; rather, it broadens access where adjoints are difficult to implement. As always, applicability and performance should be evaluated on a per-problem basis.
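As a minimal illustration of differentiating through a physics kernel, the sketch below uses hand-written forward-mode automatic differentiation (dual numbers) on a damped oscillator time stepper. The oscillator, its parameters, and the `Dual` class are assumptions for illustration; production frameworks provide this machinery, usually in reverse mode, for far larger kernels.

```python
class Dual:
    """A value together with its derivative with respect to one chosen input."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)
    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)  # product rule
    __rmul__ = __mul__

    def __neg__(self):
        return Dual(-self.val, -self.der)

def simulate(k, c, steps=1000, dt=1e-3):
    """Semi-implicit Euler for x'' = -k x - c x' (unit mass); returns x at the end."""
    x, v = 1.0, 0.0
    for _ in range(steps):
        a = -(k * x) - c * v
        v = v + dt * a
        x = x + dt * v
    return x

def grad_wrt_damping(k, c):
    """Exact derivative of the simulated final position with respect to damping c."""
    return simulate(k, Dual(c, 1.0)).der

ad_gradient = grad_wrt_damping(4.0, 0.5)
fd_gradient = (simulate(4.0, 0.5 + 1e-6) - simulate(4.0, 0.5 - 1e-6)) / 2e-6
print(f"autodiff: {ad_gradient:.8f}, finite difference: {fd_gradient:.8f}")
```

The same `simulate` function runs on plain floats or on `Dual` values, which is the essence of the approach: the gradient comes from the simulation code itself rather than from a separate derivation or from repeated perturbed runs.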

From a business standpoint, differentiable simulation frameworks can mean fewer black-box optimizations and more principled trade-offs, with faster convergence toward designs that balance performance, cost, and manufacturability, provided they are supported by rigorous verification and validation (V&V).

What You Might Be Overlooking (and Shouldn’t)

- Active design of experiments (DOE) and multifidelity optimization. Instead of sampling uniformly, Bayesian methods choose the next simulation expected to reduce uncertainty the most, mixing low-cost surrogates with select high-fidelity runs.

- Uncertainty quantification (UQ). Decision-makers benefit from ranges and confidence levels rather than single-point predictions.

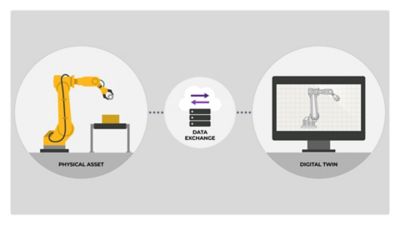

- Industrial digital twins. Combining sensors, simulation, and AI helps virtually test process changes before touching production.

Using digital twins to test process changes in industrial machinery before implementing production-level tweaks
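The active design of experiments item in the list above can be sketched with a small Gaussian-process model that proposes the next simulation where its predictive variance is largest. This is one simple acquisition rule (often called uncertainty sampling), not necessarily what any particular tool uses; the kernel, length scale, and candidate grid are assumptions for illustration.

```python
import math

def rbf(a, b, length=0.3):
    """Squared-exponential kernel with unit prior variance."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def posterior_variance(x_star, sampled, jitter=1e-8):
    """GP predictive variance at x_star given the inputs already simulated."""
    K = [[rbf(xi, xj) + (jitter if i == j else 0.0)
          for j, xj in enumerate(sampled)] for i, xi in enumerate(sampled)]
    k_star = [rbf(x_star, xi) for xi in sampled]
    alpha = solve(K, k_star)
    return 1.0 - sum(k * a for k, a in zip(k_star, alpha))

def next_point(candidates, sampled):
    """Propose the candidate where the model is currently least certain."""
    return max(candidates, key=lambda x: posterior_variance(x, sampled))

sampled = [0.0, 1.0]                      # design points already simulated
candidates = [i / 10 for i in range(11)]  # normalized design variable
print("next simulation at x =", next_point(candidates, sampled))
```

With runs at both ends of the range, the proposal lands in the middle, where the model knows least. Multifidelity variants extend the same loop by also choosing which model (surrogate or high-fidelity) to evaluate at each step.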

The Advantage Blog

The Ansys Advantage blog, featuring contributions from Ansys and other technology experts, keeps you updated on how Ansys simulation is powering innovation that drives human advancement.