What Is a Head-Up Display (HUD) and How Does It Work?

While you drive, dozens of distractions compete for your attention, both on the road and inside the vehicle: the speedometer, the fuel gauge, traffic alerts, and driving conditions. All of this is valuable information that improves your driving experience. But to see it, you have to look down or to the side, away from the road.

What Is a Head-Up Display?

A head-up display (HUD) is a form of augmented reality that presents information directly in your line of sight, so you do not have to look away to see it. As the name suggests, it helps drivers keep their eyes on the road and their heads up.

What Are the Applications of Head-Up Displays?

Driving is the most widespread application for head-up displays, but the technology has many other uses. A HUD can help wherever an operator needs to see the real world and digital information at the same time. Piloted systems such as aircraft, military vehicles, and heavy machinery are ideal use cases. In these situations, the information is projected so that the operator can see it without losing sight of the road, the sky, or the task at hand.

Video games are another common application for HUDs. Augmented reality headsets use HUD technology to let players perceive their physical surroundings through the game. Used this way, they create a mixed reality in which the game is overlaid with information about the player's status, such as health, wayfinding, and game statistics.

The worldwide adoption of telemedicine has also increased the use of head-up displays in healthcare. Head-mounted displays and smart glasses with HUD technology let medical professionals work hands-free. They are found in clinical care, education and training, care team collaboration, and even AI-assisted surgery.

Types of Head-Up Displays

Whether you are a pilot who has to keep an eye on air traffic or a gamer who has to keep the edge of the coffee table in view, there are different types of head-up displays tailored to the user's requirements. Many factors, such as the environment, cost constraints, and user comfort, play a role in choosing the right type of HUD for the application.

Although HUD types vary by industry and use case, most HUDs consist of the same three components: a light source (e.g., an LED), a reflector (e.g., a windshield, a combiner, or a flat lens), and a magnifying system.

Every HUD has a light source (the picture generation unit) and a surface that reflects the image. This surface is usually transparent so that the user can see through it. An optical magnifying system generally sits between the light source and the reflective surface. The magnifying system can be:

- One or more freeform mirrors that magnify the image

- A waveguide with gratings that magnify the image

- A magnifying lens (typical of aircraft HUDs)

- Nothing (some HUDs have no magnification)
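To give a rough feel for what a magnifying mirror does, the sketch below applies the paraxial mirror equation, 1/f = 1/d_o + 1/d_i, to a single concave mirror. This is a simplified textbook model, not how any particular HUD is designed: real systems use freeform mirrors and a curved windshield. The function name and the numeric values are hypothetical and chosen only for illustration.

```python
def virtual_image(focal_length_mm: float, object_distance_mm: float) -> tuple[float, float]:
    """Return (image_distance_mm, magnification) for a concave mirror.

    Paraxial mirror equation: 1/f = 1/d_o + 1/d_i (real distances positive).
    A negative image distance means a virtual image behind the mirror,
    which is the case of interest for a HUD.
    """
    if focal_length_mm <= 0 or object_distance_mm <= 0:
        raise ValueError("focal length and object distance must be positive")
    if object_distance_mm == focal_length_mm:
        raise ValueError("object at the focal point: image is at infinity")
    image_distance_mm = 1.0 / (1.0 / focal_length_mm - 1.0 / object_distance_mm)
    magnification = -image_distance_mm / object_distance_mm
    return image_distance_mm, magnification


# Hypothetical values: display placed 250 mm from a mirror with f = 300 mm.
d_i, m = virtual_image(focal_length_mm=300.0, object_distance_mm=250.0)
print(f"image distance: {d_i:.0f} mm, magnification: {m:.1f}x")
```

With the display inside the focal length, the image distance comes out negative (about 1.5 m behind the mirror here) and the image is upright and roughly six times larger, which is why a small display can appear as a larger image floating ahead of the viewer.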

Benefits of HUDs

Head-up displays project visual information into the user's current field of view. This offers several important benefits:

- It increases safety through better focus and attention

- It prioritizes and filters the most relevant information at the right time

- It relieves the eye strain caused by constantly changing focus

- It builds trust between autonomous vehicles and drivers by showing that the system and the human share the same reality

How Does a Head-Up Display Work?

If you point your phone's flashlight at a window, you see both the reflection of the light and the world behind the window. A head-up display creates a similar experience by reflecting a digital image off a transparent surface. This optical system delivers the information to the user in four steps:

- Image generation: The picture generation unit processes data into an image

- Light projection: A light source then projects the image toward the desired surface

- Magnification: The light is reflected or refracted to magnify the image

- Optical combination: The digital image is displayed on the combiner surface so that it overlays the real-world view
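The four steps can be sketched as a toy pipeline. The code below is an illustrative model only, not a description of how a real picture generation unit or optical system is implemented. All function names, the bar-style image, and the reflectance and transmittance values are assumptions made for the example; the combination step uses the common approximation that the viewer sees the transmitted scene plus the reflected HUD light.

```python
# Toy model of the four HUD steps; all names and numbers are illustrative assumptions.
Image = list[list[float]]  # rows of pixel values


def generate_image(speed_kmh: float, max_speed_kmh: float = 200.0, width: int = 4) -> Image:
    """Step 1, image generation: encode a speed reading as a one-row bar (1.0 = lit pixel)."""
    lit = round(width * min(speed_kmh, max_speed_kmh) / max_speed_kmh)
    return [[1.0 if x < lit else 0.0 for x in range(width)]]


def project(image: Image, source_luminance: float) -> Image:
    """Step 2, light projection: scale pixel values by the source luminance (cd/m^2)."""
    return [[px * source_luminance for px in row] for row in image]


def magnify(image: Image, factor: int) -> Image:
    """Step 3, magnification: enlarge by repeating pixels; optical losses are ignored."""
    out: Image = []
    for row in image:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def combine(hud: Image, scene: Image, reflectance: float = 0.25, transmittance: float = 0.7) -> Image:
    """Step 4, optical combination: reflected HUD light adds to the transmitted scene."""
    return [
        [transmittance * s + reflectance * h for h, s in zip(hud_row, scene_row)]
        for hud_row, scene_row in zip(hud, scene)
    ]


hud = magnify(project(generate_image(100.0), source_luminance=10_000.0), factor=2)
scene = [[1_000.0] * len(hud[0]) for _ in hud]  # uniform background, hypothetical value
view = combine(hud, scene)
print(view[0])
```

Pixels where the HUD is lit end up brighter than the background alone, while unlit pixels show only the dimmed scene. This is the flashlight-on-a-window effect expressed in numbers.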

Designing a Head-Up Display

Head-up displays depend on human perception, which makes designing and testing them very complex. Technical metrics help designers meet a specification, but they can only succeed once they understand how human perception affects the process.

To account for the human element, HUD designers work with simulation. By testing and validating their models digitally, they can address many scenarios and technical challenges proactively before building costly physical prototypes, or even avoid prototypes altogether. These challenges can include:

- Ghost images, warping, and dynamic distortion

- Physiological differences such as head position and color blindness

- Color shifts caused by coated windshields or polarized glasses

- Contrast, legibility, and luminance of the projected images

- Sunlight that impairs legibility and safe visibility
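One of these challenges, contrast against a bright background, can be illustrated with a short calculation. The sketch below uses a common definition of contrast ratio for see-through displays, (HUD luminance + background luminance) / background luminance. It is a back-of-the-envelope model, not a substitute for optical simulation, and the function names and luminance values are hypothetical.

```python
def contrast_ratio(hud_luminance: float, background_luminance: float) -> float:
    """Contrast of a see-through display: (L_hud + L_background) / L_background.

    Both luminances are in cd/m^2, as seen by the viewer.
    """
    if background_luminance <= 0:
        raise ValueError("background luminance must be positive")
    return (hud_luminance + background_luminance) / background_luminance


def required_hud_luminance(target_contrast: float, background_luminance: float) -> float:
    """HUD luminance needed to reach a target contrast ratio against a given background."""
    if target_contrast < 1.0:
        raise ValueError("contrast ratio cannot be below 1 for an additive overlay")
    return (target_contrast - 1.0) * background_luminance


# Hypothetical scenes: a dark road at night and a sunlit road surface.
for label, background in [("night", 10.0), ("bright sun", 10_000.0)]:
    ratio = contrast_ratio(hud_luminance=500.0, background_luminance=background)
    print(f"{label}: contrast ratio {ratio:.2f}")
```

The same HUD luminance that gives ample contrast at night is nearly invisible against a sunlit background, which is one reason the luminance of the projected image has to be considered across lighting conditions.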

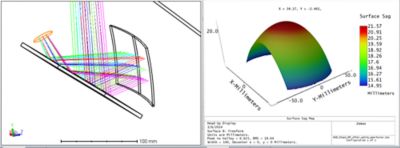

Example: Simulating Sun Reflection

Simulation lets engineers predict how the sun's reflection affects the legibility of HUD information in different scenarios.

The following simulation results show the sun's reflection off the mirrors inside the HUD. The four results use the same setup and sun position, with four different materials applied to the HUD housing. Note that the unwanted reflection becomes less noticeable with each successive material. In the last image, the material absorbs the reflections completely.

Engineers and designers can benefit considerably from optical simulation tools such as Ansys Zemax OpticStudio and Ansys Speos, which simulate the optical performance of a system and evaluate the final lighting effect based on human vision.

With these simulation tools, you can:

- Determine visual aspects, reflection, visibility, and legibility for a specific human observer

- Simulate visual predictions based on physiological models of human vision

- Improve visual quality by optimizing colors, contrast, harmony, light uniformity, and intensity

- Account for ambient lighting conditions, including day and night vision

HUD Raindrop Simulation

In the automotive industry, simulation can also help engineers see how a driver perceives a HUD in different weather scenarios. The accompanying video shows how a simulation models human perception when viewing the HUD during a rain shower.

Focus on the Future

As human performance continues to depend on data, the need to prioritize information and deliver it to people in the fastest and safest way is at the forefront of innovation. Beyond developments in the automotive sector, HUD technology can help people do their jobs in a wide range of industries, such as healthcare, infrastructure, and communications.
